Let's recap important milestones of the last month.

AI last month

In February, several tech giants made significant strides in artificial intelligence. OpenAI launched a paid subscription for ChatGPT, Google announced its Bard chatbot and new AI features in Search, Microsoft launched AI-powered versions of Bing and Edge, and Meta released LLaMA. Other notable announcements included a generative AI model for video, a new benchmark for machine translation, a framework for differentially private aggregation, and NVIDIA's AI-on-5G system for edge AI. Hugging Face and AWS also partnered to make generative AI more accessible, while Tesla and Apple made news with machine learning patents.

- OpenAI introduces ChatGPT Plus, a paid subscription plan for ChatGPT. It also releases the ChatGPT and Whisper APIs.

- Google announces FRMT, a benchmark for few-shot region-aware machine translation

- Runway, one of the startups behind Stable Diffusion, launches Gen-1, a generative AI model for video

- Google announces Bard, a ChatGPT-like conversational AI, along with new AI features in Search

- Microsoft releases a new AI-powered Bing and Edge

- Google AI and collaborating researchers introduce FriendlyCore, a machine learning framework for computing differentially private aggregations

- Hugging Face and AWS partner to make generative AI more accessible

- NVIDIA unveils GPU-Accelerated AI-on-5G System for Edge AI

- Meta releases LLaMA, a family of large language models

- Tesla patents virtualization and machine learning software to improve Full Self-Driving

- An Apple patent describes machine learning-enabled object detection algorithms that would let users customize Memoji in finer detail

- Nebuly introduces ChatLLaMA, billed as the first open-source library for building ChatGPT-like assistants on top of LLaMA

The release of ChatLLaMA is a notable milestone for open-source AI development. By providing an open-source implementation of the ChatGPT-style training process, including reinforcement learning from human feedback (RLHF), Nebuly makes it easier for developers to create AI assistants tailored to their users' needs. As the library matures, it has the potential to change the way AI assistants are created and deployed.

Back to AI this week.

This section links to interesting developments in AI from this week.

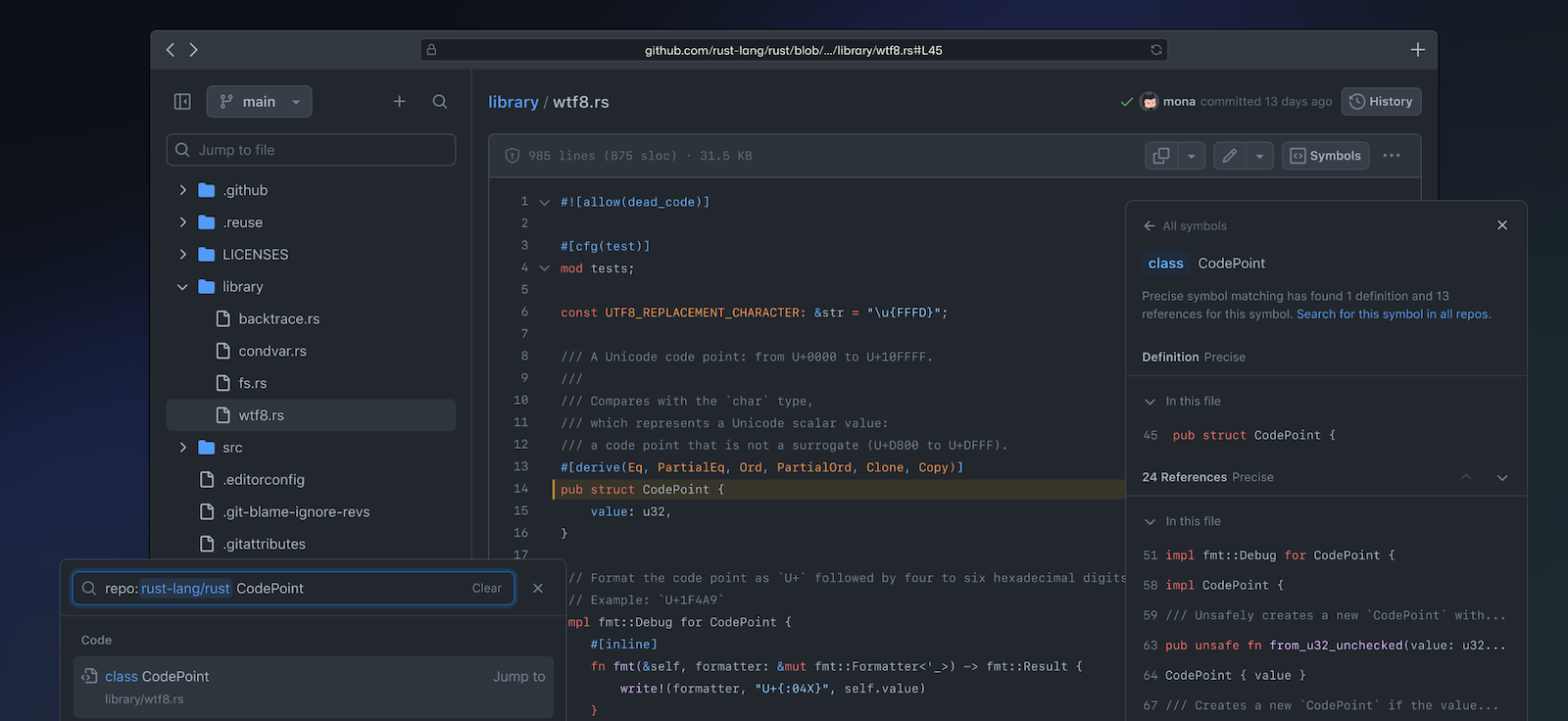

In the following blog post, GitHub shares the technology behind its new code search.

Search technology is complex and constantly evolving, and in recent years it has shifted from simple keyword-based matching toward more sophisticated semantic search methods. Code is a special case, though: to meet the unique needs of the content hosted on GitHub, a whole new approach was required, so GitHub built a bespoke search solution. The post describes these efforts in detail. Very insightful and highly recommended.

Let's shift the focus from search to images now.

Stable Diffusion is a recent text-to-image diffusion model that has shown remarkable results in image generation, and the wave of work it set off shows no sign of slowing down.

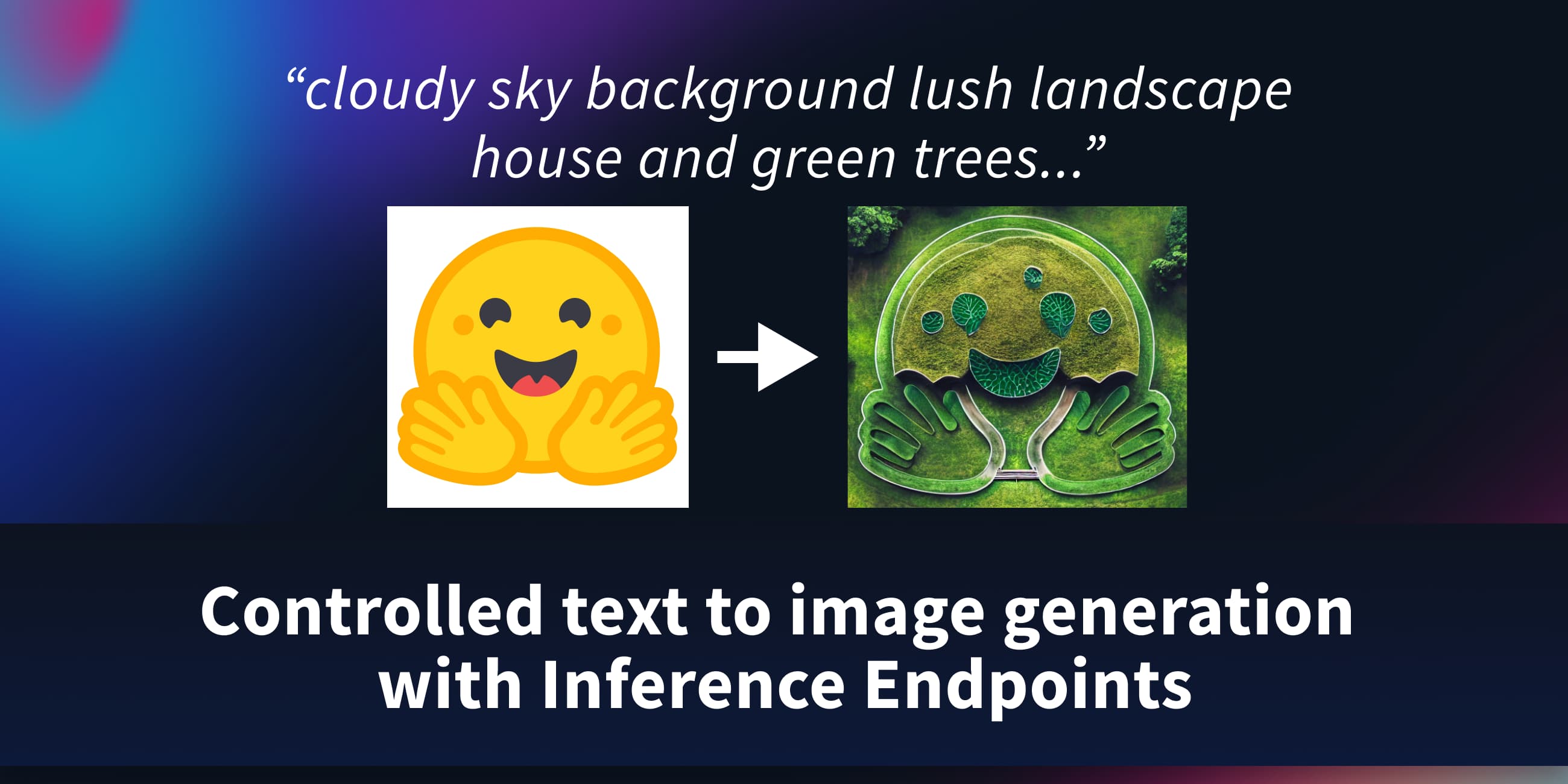

However, one of the main challenges in using this model is achieving controlled image generation, where the user can specify certain attributes or characteristics of the generated images.

The following works explore ways to achieve that.

- MultiDiffusion is a framework that enables versatile and controllable image generation using a pre-trained text-to-image diffusion model without further training. It can generate high-quality and diverse images that adhere to user-provided controls, such as aspect ratio and spatial guiding signals.

- SpaText is a new method for text-to-image generation that allows fine-grained control over the shapes and layouts of different objects in an image using open-vocabulary scene control. The method leverages large-scale text-to-image datasets and a novel CLIP-based spatio-textual representation to achieve state-of-the-art results on image generation with free-form textual scene control.

- Composer is a new generation paradigm that offers flexible control over the output image by decomposing the image into representative factors and training a diffusion model with these factors as conditions. This approach supports various levels of conditions and allows customizable content creation while maintaining synthesis quality and model creativity. The authors train a 5-billion-parameter controllable diffusion model for more controllable image generation.

- ControlNet is a neural network structure that controls diffusion models by adding extra conditions. With it, users can guide generation with spatial context such as a depth map, a segmentation map, a scribble, or keypoints.
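Returning to MultiDiffusion from the list above: its core idea is to run a pre-trained denoiser on overlapping windows of a larger canvas and reconcile the windows at every denoising step. The sketch below is a toy, one-dimensional illustration under simplifying assumptions: a list of floats stands in for the latent, every window gets equal weight, and `denoise` is a hypothetical placeholder for one step of a real diffusion model. It is not the authors' code. With uniform weights, averaging the overlapping predictions at each position is the least-squares way to reconcile them.

```python
from typing import Callable, List

# Hypothetical stand-in for one denoising step of a pre-trained diffusion model,
# applied to a single window (crop) of the latent.
Denoiser = Callable[[List[float]], List[float]]


def window_starts(length: int, window: int, stride: int) -> List[int]:
    """Start offsets of overlapping windows that together cover the whole canvas."""
    if stride <= 0 or window > length:
        raise ValueError("need stride > 0 and window <= length")
    starts = list(range(0, length - window + 1, stride))
    # Make sure the last window reaches the end of the canvas.
    if starts[-1] != length - window:
        starts.append(length - window)
    return starts


def multidiffusion_step(latent: List[float], window: int, stride: int,
                        denoise: Denoiser) -> List[float]:
    """Denoise each window separately, then average wherever windows overlap."""
    total = [0.0] * len(latent)
    count = [0] * len(latent)
    for start in window_starts(len(latent), window, stride):
        prediction = denoise(latent[start:start + window])
        for offset, value in enumerate(prediction):
            total[start + offset] += value
            count[start + offset] += 1
    return [t / c for t, c in zip(total, count)]


# Toy denoiser (assumption, for illustration only): each window predicts its own mean.
def mean_denoiser(crop: List[float]) -> List[float]:
    m = sum(crop) / len(crop)
    return [m] * len(crop)


print(multidiffusion_step([0.0, 0.0, 0.0, 4.0, 4.0, 4.0], 4, 2, mean_denoiser))
# [1.0, 1.0, 2.0, 2.0, 3.0, 3.0]
```

The two windows disagree (means 1.0 and 3.0), and the overlapping positions settle on the average, which is how the fused result stays consistent across window borders.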

Read the following blog post by Philipp Schmid from Hugging Face and build your own ControlNet pipeline.
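ControlNet's key trick, as described in its paper, is to keep the pre-trained block frozen, train a copy of it that also sees the extra condition, and join the two with "zero convolutions" whose weights and biases start at zero, so the controlled model initially behaves exactly like the original. The toy sketch below illustrates only that initialization property: small dense layers over lists of floats stand in for convolution blocks, and `Dense`, `zero_layer` and `ControlledBlock` are hypothetical names, not the diffusers API.

```python
import copy
from typing import List

Vector = List[float]


class Dense:
    """Toy stand-in for a network block: y = W x + b, optionally followed by ReLU."""

    def __init__(self, weight: List[Vector], bias: Vector, relu: bool = True):
        self.weight = weight
        self.bias = bias
        self.relu = relu

    def __call__(self, x: Vector) -> Vector:
        out = [sum(w * xi for w, xi in zip(row, x)) + b
               for row, b in zip(self.weight, self.bias)]
        return [max(0.0, v) for v in out] if self.relu else out


def zero_layer(dim: int) -> Dense:
    """Linear layer whose weights and biases all start at zero (the 'zero convolution' idea)."""
    return Dense([[0.0] * dim for _ in range(dim)], [0.0] * dim, relu=False)


class ControlledBlock:
    """Frozen block plus a trainable copy that receives the condition c."""

    def __init__(self, locked: Dense):
        dim = len(locked.bias)
        self.locked = locked                    # pre-trained weights, kept frozen
        self.trainable = copy.deepcopy(locked)  # copy that would be fine-tuned
        self.zero_in = zero_layer(dim)          # injects the condition into the copy
        self.zero_out = zero_layer(dim)         # adds the copy's output back

    def __call__(self, x: Vector, c: Vector) -> Vector:
        y = self.locked(x)
        conditioned = [xi + ci for xi, ci in zip(x, self.zero_in(c))]
        control = self.zero_out(self.trainable(conditioned))
        return [yi + ci for yi, ci in zip(y, control)]


block = Dense([[1.0, -1.0], [0.5, 2.0]], [0.1, 0.0])
controlled = ControlledBlock(block)
x, c = [1.0, 2.0], [3.0, -4.0]
print(block(x), controlled(x, c))  # identical at initialization: [0.0, 4.5] [0.0, 4.5]
```

Once training moves the zero layers away from zero, the condition starts to steer the output, while the frozen block keeps what the pre-trained model already knows.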

Videos this week

Following are some of the videos that I watched this week and found worth sharing.

This Two Minute Papers video covers Dreamix, an AI video generator. It is a must-watch for a glimpse of what is around the corner.

We recently discussed LLaMA. Yannic Kilcher covers the topic in more detail in the following video.

Last week we started a series on GPT. If you are curious about these language models and want a good overview, the following video is very helpful. In it, Prof. Christopher Potts describes the fundamental building blocks of these systems and how we can reliably assess and understand them.

Events

Sign up to stay updated

Our next monthly meetup is about building an AI-powered content management system (CMS). We will use newer generative tools, such as text content creators and image builders, to show how a generative CMS can be built.

At this event, attendees will learn how to enhance popular open-source CMS tools such as WordPress, Drupal and Joomla by integrating generative AI. We will showcase how this integration can lead to a more personalized, efficient and user-friendly experience for both content creators and consumers.

Don't miss this opportunity to expand your knowledge, network with the community and take away valuable insights on how generative AI can revolutionize the way we use popular open-source CMS tools.

To attend this event, please sign up using the link below.

In case you missed it

Last Week's Posts

Acknowledgement: Thanks to Dr. Gaurav Sood for his contribution to improving the newsletter.

Follow us on social media, where we post bite-sized AI updates daily.

YouTube: http://youtube.com/@everydayseries

TikTok: https://www.tiktok.com/@everydayseries

Instagram: http://instagram.com/everydayseries_

Twitter: http://twitter.com/everydaseries_

We research, curate and publish regular updates from the field of AI.

Consider becoming a paying subscriber to get the latest!