Hochreiter, S. and Schmidhuber, J., 1997. Long short-term memory. Neural computation, 9(8), pp.1735-1780.

Link: https://ieeexplore.ieee.org/abstract/document/6795963

Notes:

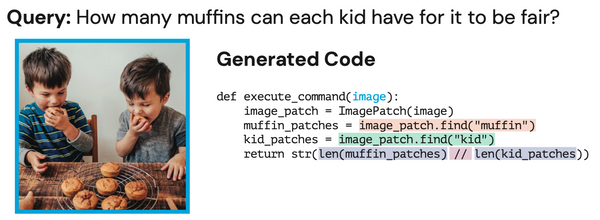

The 1997 research paper "Long Short-Term Memory" by Sepp Hochreiter and Jürgen Schmidhuber introduced the Long Short-Term Memory (LSTM) architecture, a type of recurrent neural network (RNN) designed to handle the vanishing gradient problem of traditional RNNs. LSTMs maintain a "memory" of past information and learn when to let information into and out of that memory, which allows them to learn long-term dependencies in sequential data. The paper is considered a seminal work in the field of deep learning, and LSTMs have since been used in a wide range of applications, including natural language processing, speech recognition, and time series forecasting.

- Learning to store information over extended time intervals by recurrent backpropagation takes a very long time, mostly because of insufficient, decaying error backflow.

- The authors briefly review Hochreiter's 1991 analysis of this problem and then introduce a novel, efficient, gradient-based method called long short-term memory (LSTM).

- By truncating the gradient where this does no harm, LSTM can learn to bridge minimal time lags in excess of 1000 discrete-time steps by enforcing constant error flow through constant error carousels within special units. The video at the end of the post explains this and the previous point really well.

- LSTM is local in space and time, and the authors' experiments use local, distributed, real-valued, and noisy pattern representations. They show the advantages of LSTM over real-time recurrent learning, backpropagation through time, recurrent cascade correlation, Elman nets, and neural sequence chunking. It was a genuine innovation at the time.

- Section 5 is the most interesting one, where they share the experimental results. The tasks are: (1) learning an embedded grammar, (2) noise-free sequences with long time lags, (3) noise and signal on the same channel, (4) the adding problem (according to the authors, never solved by any other recurrent network), (5) the multiplication problem, and (6) temporal order (artificial, but again not solved by any other recurrent network).
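To make the "decaying error backflow" and "constant error carousel" points above concrete, here is a minimal scalar sketch. It is not code from the paper: the function name `backflow_factor`, the weight 0.9, and the starting activation are illustrative assumptions. It compares how much of an error signal survives when propagated back through a single self-connected tanh unit versus a linear unit whose self-connection weight is exactly 1.0, which is the idea behind the carousel.

```python
import math


def backflow_factor(weight, steps, squash=True, h0=0.5):
    """Return |d h_T / d h_0| for one self-connected unit (hypothetical helper).

    squash=True : h_t = tanh(weight * h_{t-1}), a conventional recurrent unit
    squash=False: h_t = weight * h_{t-1}, a linear unit; with weight == 1.0
                  this behaves like a constant error carousel
    """
    h = h0
    factor = 1.0
    for _ in range(steps):
        net = weight * h
        if squash:
            h = math.tanh(net)
            local = weight * (1.0 - h * h)  # derivative of tanh(net) w.r.t. previous h
        else:
            h = net
            local = weight
        factor *= abs(local)  # chain rule: multiply the per-step derivatives
    return factor


if __name__ == "__main__":
    for steps in (10, 100, 1000):
        plain = backflow_factor(0.9, steps)
        carousel = backflow_factor(1.0, steps, squash=False)
        print(f"{steps:5d} steps  tanh unit: {plain:.3e}  carousel: {carousel:.1f}")
```

The tanh unit's factor shrinks roughly geometrically with the number of steps, while the linear unit with weight 1.0 passes the error back unchanged no matter how long the lag is.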

More details:

The paper begins by introducing the problem of vanishing gradients in traditional RNNs and the resulting difficulty of learning long-term dependencies in sequential data, including a review of Hochreiter's 1991 analysis. It then describes the LSTM architecture: the memory cell and the multiplicative input and output gates that control the flow of information into and out of it (the forget gate found in most modern LSTMs was added in later work). The authors also explain how this architecture overcomes the vanishing gradient problem: the cell's self-connection keeps the error flow constant, while the gates learn when to write to the cell and when to read from it.
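The memory cell idea can be sketched in a few lines. This is a simplified single-cell illustration, assuming an input gate and an output gate wrapped around a state with a fixed self-connection of 1.0; the use of `tanh` as the squashing function, the hand-set gate inputs, and the names `memory_cell_step` and `store_and_hold` are assumptions made for the example, not the paper's exact setup. In a real network the gate inputs are computed from learned weights.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def memory_cell_step(state, net_cell, net_in, net_out):
    """One forward step of a single memory cell (illustrative sketch).

    state    : internal cell state from the previous step
    net_cell : net input to the cell itself
    net_in   : net input to the input gate
    net_out  : net input to the output gate
    Returns (new_state, cell_output).
    """
    y_in = sigmoid(net_in)     # input gate: how much new information is written
    y_out = sigmoid(net_out)   # output gate: how much of the state is exposed
    candidate = math.tanh(net_cell)
    new_state = state + y_in * candidate  # self-connection with weight 1.0
    output = y_out * math.tanh(new_state)
    return new_state, output


def store_and_hold(lag):
    """Write a value, keep both gates closed for `lag` steps, then read it out."""
    # Step 0: input gate open, output gate closed.
    state, _ = memory_cell_step(0.0, net_cell=2.0, net_in=20.0, net_out=-20.0)
    stored = state
    # Distractor inputs arrive, but the closed input gate shields the state.
    for _ in range(lag):
        state, _ = memory_cell_step(state, net_cell=1.0, net_in=-20.0, net_out=-20.0)
    # Final step: output gate open, the stored value becomes visible.
    state, readout = memory_cell_step(state, net_cell=1.0, net_in=-20.0, net_out=20.0)
    return stored, state, readout


if __name__ == "__main__":
    stored, state, readout = store_and_hold(1000)
    print(f"stored={stored:.6f}  state after lag={state:.6f}  readout={readout:.6f}")
```

With the gates closed, the state barely moves over 1000 steps, which is the behavior the gates are meant to learn on long-lag tasks.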

The paper also includes experimental results that demonstrate the effectiveness of the LSTM architecture on a variety of tasks. The results show that LSTM networks learn long-term dependencies and outperform traditional RNNs on these tasks.
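To give a feel for what such a long-lag task looks like, here is a small data generator for a simplified version of the adding problem. It is a sketch under stated assumptions, not the paper's exact protocol: the marker placement (one in the first tenth of the sequence, one in the last tenth), the unscaled sum as target, and the name `make_adding_example` are choices made for this illustration.

```python
import random


def make_adding_example(length, rng):
    """Return (sequence, target) for one simplified adding-problem example.

    sequence : list of (value, marker) pairs, values drawn from [-1, 1]
    target   : sum of the two values whose marker is 1.0
    Assumes length >= 2.
    """
    values = [rng.uniform(-1.0, 1.0) for _ in range(length)]
    tenth = max(1, length // 10)
    first = rng.randrange(tenth)                     # early marked position
    second = rng.randrange(length - tenth, length)   # late marked position
    markers = [0.0] * length
    markers[first] = 1.0
    markers[second] = 1.0
    target = values[first] + values[second]
    return list(zip(values, markers)), target


if __name__ == "__main__":
    rng = random.Random(0)
    sequence, target = make_adding_example(100, rng)
    marked = [(i, v) for i, (v, m) in enumerate(sequence) if m == 1.0]
    print("marked positions and values:", marked)
    print("target:", target)
```

A network has to remember the first marked value across almost the entire sequence and ignore every unmarked value in between, which is why tasks of this kind are hard for networks with decaying error backflow.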

The paper also discusses the potential of LSTMs in other applications, such as speech recognition and time series prediction.

Overall, the paper presents a clear and thorough explanation of the LSTM architecture and its advantages over traditional RNNs and provides evidence of its effectiveness through experimental results. It was a breakthrough paper in the field of deep learning and recurrent neural networks.

Online Resource:

Another paper worth reading, co-authored by Hochreiter and Schmidhuber:

Hochreiter, S., Bengio, Y., Frasconi, P. and Schmidhuber, J., 2001. Gradient flow in recurrent nets: the difficulty of learning long-term dependencies.
