This post is for subscribers only

Sign up now to read the post and get access to the full library of posts for subscribers only.

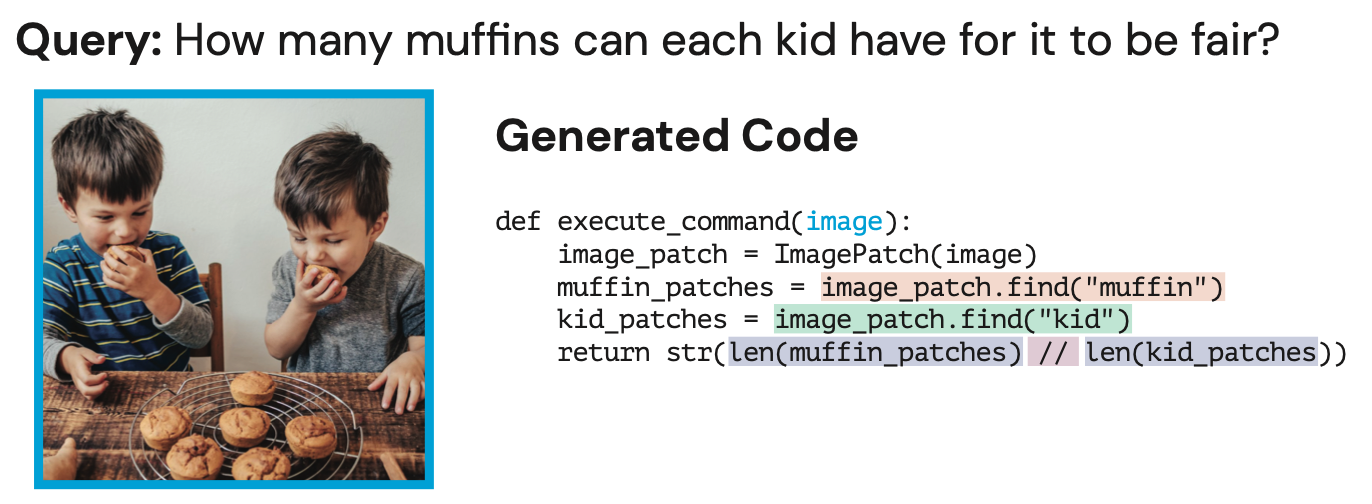

Unlocking the Power of AI to Understand and Answer Complex Questions About Images and Videos
