Welcome to Everyday Series, your go-to source for the latest developments and news in the world of Artificial Intelligence!

With so much happening in the world of Generative AI, how can we not stay unaffected? We have updated our website with Generative AI tools for users to increase their productivity.

You may see an updated landing page, newer tools released every day, and a completely rebranded website with many new features. I will be sending a detailed blog about Generative AI at Everyday Series and how can you take advantage of it.

So I can now say:

Welcome to Everyday Series, your go-to source for the latest developments, news and Productive AI tools in the world of Artificial Intelligence!

Here's what happened in the last week:

The world of Artificial Intelligence is moving at an incredibly fast pace, and recent developments have proven just how quickly things are advancing. It used to take months for new AI technologies to be developed and released, but now it's happening in a matter of days.

It all started with ChatGPT, an AI model with remarkable conversational competency and reasoning capabilities across many domains. Then, just weeks later, Facebook released LLaMA, an open-source large language model that can run locally without censorship.

But the speed of development has only increased since then. Just a few days ago, someone accidentally released LLaMA on BitTorrent, and others quickly built solutions to run it on Macbook, Windows, and Raspberry Pi. Then, Stanford University developed a better version.

Stanford researchers were able to train a language model from Meta using text generated by OpenAI's GPT-3.5 for less than $600. This resulted in similar performance as the language models developed by big tech companies which can be quite expensive. The researchers used thousands of demonstrations generated by OpenAI's GPT-3.5 to fine-tune a variant of Meta's LLaMA model, which has seven billion parameters. This shows that it's possible for smaller research groups to develop powerful language models without breaking the bank.

And the pace of development shows no signs of slowing down. Google has just launched its generative AI suite, Microsoft launched Co-pilot and OpenAI released GPT-4, their latest milestone in scaling up deep learning. The speed does not seem to slow down. A new paper which talked about AI which understands videos and images came out.

The message is clear: in the world of AI, you snooze, you lose.

OpenAI has unveiled GPT-4, a multimodal model that accepts image and text inputs, emitting text outputs. While it may be less capable than humans in many real-world scenarios, it exhibits human-level performance on various professional and academic benchmarks. What's more, it's 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on internal evaluations.

Meanwhile, researchers at NTT Device Technology Labs and the University of Tokyo have developed a training algorithm that goes a long way towards letting unconventional machine learning systems meet their promise. Their results, established on an optical analogue computer, represent progress towards obtaining the potential efficiency gains that researchers have long sought from "unconventional" computer architectures.

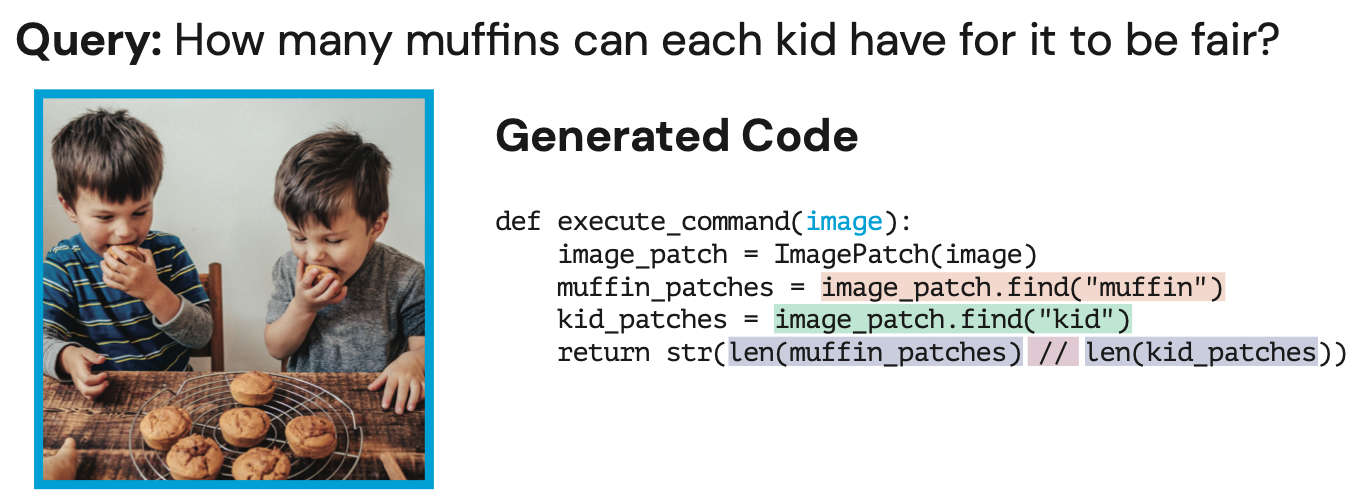

AI models like ChatGPT have remarkable conversational competency and reasoning capabilities across many domains. However, it's currently incapable of processing or generating images from the visual world. To address this issue, a system called Visual ChatGPT has been developed, incorporating different Visual Foundation Models, to enable the user to interact with ChatGPT by sending and receiving not only languages but also images, providing complex visual questions or visual editing instructions that require the collaboration of multiple AI models with multi-steps.

NVlabs has published Prismer, a Vision-Language Model with Multi-Modal Experts, achieving state-of-the-art performance on visual question answering and image captioning.

Meanwhile, IBM and Stanford have proposed UDAPDR, an unsupervised domain adaptation via LLM prompting and distillation of rankers to improve domain adaptation under annotation constraints and domain shifts.

Also, Google has trained and released FLAN-UL2, a 20-billion parameter transformer that outperforms FLAN-T5 by a relative of ~4%. In “Scaling Instruction-Finetuned language models," the key idea is to train a large language model on a collection of datasets, phrased as instructions, which enable generalization across diverse tasks.

An interesting gem that I discovered this week:

I packed-up a full-text paper scraper, vector database, and LLM into a CLI to answer questions from only highly-cited peer-reviewed papers. Feels unreal to be able instantly get answers by an LLM "reading" dozens of papers. 1/2 pic.twitter.com/a6PWxWyuc1

— Andrew White ⏣🧪 (@andrewwhite01) February 25, 2023

How To's

How to's of the week

This section covers the AI tools available in everyday series.

Events

Signup to stay updated

We have our next monthly meetup around building an AI-powered content management system. The newer methodologies like text content creator, image builder etc will be used to suggest how Generative CMS can be built.

At this event, attendees will learn how to enhance popular open-source CMS tools such as WordPress, Drupal and Joomla, by integrating generative AI. We will showcase how this integration can lead to a more personalized, efficient and user-friendly experience for both content creators and consumers.

Don't miss this opportunity to expand your knowledge, network with the community and take away valuable insights on how generative AI can revolutionize the way we use popular open-source CMS tools.

To attend this event, please signup at the below-mentioned link.

In case you missed

Last Week's Posts

Do follow us on our social media where we post micro-byte information daily.

YouTube: http://youtube.com/@everydayseries

TikTok: https://www.tiktok.com/@everydayseries

Instagram: http://instagram.com/everydayseries_

Twitter: http://twitter.com/everydaseries_

We research, curate and publish regular updates from the field of AI.

Consider becoming a paying subscriber to get the latest!