This paper introduces GPT-3, a 175-billion-parameter language model, and shows that a sufficiently large language model can perform a wide range of natural language tasks after seeing only a handful of examples, with no fine-tuning at all. On several benchmarks its few-shot results are competitive with, and occasionally better than, models fine-tuned specifically for the task. These findings open up new possibilities for how we use language models and make them considerably more versatile.

In a traditional machine learning setting, mastering a task requires an abundance of labelled training data. Few-shot learning tries to break that mould by doing more with less. In this paper, "few-shot" has a specific meaning: the model receives a short task description and a small number of solved examples (typically 10 to 100, as many as fit in its 2,048-token context window) directly in its input, and must then complete a new, unsolved example. Its weights are never updated. The paper also evaluates one-shot (a single example) and zero-shot (the task description alone) versions of this setup. This matters for natural language understanding, where labelled datasets for a specific task can be scarce or expensive to build. The authors measure how far this approach can go and what it might make possible.
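In the paper, the "few examples" are not used for training at all; they are simply placed in the model's input. Below is a minimal Python sketch of how that input might be assembled. It is modelled on the English-to-French illustration in the paper (sea otter => loutre de mer, and so on), but the `build_prompt` helper, its parameters and the ` => ` separator are hypothetical choices, not code from the paper, and no model is called. Passing zero demonstrations gives a zero-shot prompt, one gives one-shot, and several give few-shot.

```python
from typing import Sequence, Tuple


def build_prompt(
    task_description: str,
    demonstrations: Sequence[Tuple[str, str]],
    query: str,
    separator: str = " => ",
) -> str:
    """Assemble an in-context prompt (hypothetical helper, not from the paper).

    K = len(demonstrations): 0 -> zero-shot, 1 -> one-shot, more -> few-shot.
    Nothing is trained; the demonstrations are only text the model reads.
    """
    lines = [task_description]
    # Each solved example is written as "input => output".
    for source, target in demonstrations:
        lines.append(f"{source}{separator}{target}")
    # The unsolved query stops at the separator, so the model's continuation is its answer.
    lines.append(f"{query}{separator.rstrip()}")
    return "\n".join(lines)


demos = [
    ("sea otter", "loutre de mer"),
    ("peppermint", "menthe poivrée"),
    ("plush girafe", "girafe peluche"),
]
print(build_prompt("Translate English to French:", demos, "cheese"))  # few-shot (K = 3)
print(build_prompt("Translate English to French:", [], "cheese"))     # zero-shot (K = 0)
```

The design choice worth noticing is that switching between zero-, one- and few-shot only changes the length of `demonstrations`; the model and its weights stay exactly the same.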

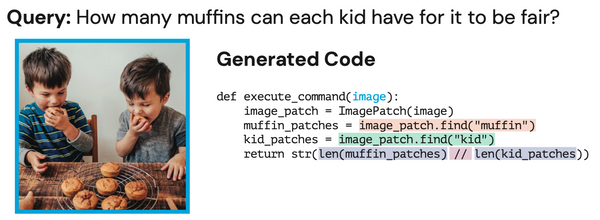

The authors take a deep dive into the few-shot learning capabilities of large language models. They train GPT-3 along with seven smaller models, ranging from 125 million to 175 billion parameters, and test them on more than two dozen datasets. These include standard tasks such as translation, question answering, reading comprehension and cloze-style sentence completion, as well as tasks designed to probe on-the-fly adaptation, such as unscrambling words, doing multi-digit arithmetic and using a newly defined word in a sentence.
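The paper describes its few-shot evaluation as drawing K examples at random from each task's training set for every test example and using them as conditioning. The sketch below shows that loop in Python under loud assumptions: `model` is a hypothetical stand-in for a call to a frozen language model, the `Q:`/`A:` format and exact-match scoring are illustrative choices (the paper uses task-specific formats and metrics), and `toy_model` is a trivial stub included only so the code runs.

```python
import random
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]  # (input text, gold answer)


def few_shot_accuracy(
    model: Callable[[str], str],
    train_set: Sequence[Example],
    eval_set: Sequence[Example],
    k: int,
    seed: int = 0,
) -> float:
    """Exact-match accuracy of a frozen model in the K-shot setting.

    For every evaluation example, K demonstrations are drawn at random from the
    training set and placed in the prompt; the model's weights are never updated.
    """
    rng = random.Random(seed)
    correct = 0
    for question, gold in eval_set:
        demos: List[Example] = rng.sample(list(train_set), k)
        # Demonstrations are separated by blank lines (an illustrative format choice).
        blocks = [f"Q: {q}\nA: {a}" for q, a in demos]
        blocks.append(f"Q: {question}\nA:")
        prompt = "\n\n".join(blocks)
        prediction = model(prompt).strip()
        correct += int(prediction == gold)
    return correct / len(eval_set)


def toy_model(prompt: str) -> str:
    """Hypothetical stub standing in for a language model: it adds the two
    numbers in the final question and ignores the demonstrations."""
    last_question = prompt.rsplit("Q: ", 1)[1].split("\n", 1)[0]
    a, b = (int(x) for x in last_question.split(" + "))
    return f" {a + b}"


train = [("1 + 1", "2"), ("2 + 3", "5"), ("4 + 4", "8")]
evaluation = [("7 + 8", "15"), ("10 + 5", "15")]
print(few_shot_accuracy(toy_model, train, evaluation, k=2))
```

Replacing `toy_model` with a real model and running the same loop with `k` set to 0, 1 and larger values mirrors, in outline, the zero-, one- and few-shot comparison the paper reports.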

GPT-3 shows an extraordinary capacity to learn quickly. On some benchmarks, such as closed-book question answering on TriviaQA, its few-shot results match or exceed those of fine-tuned models, although it still falls well short on others, including some natural language inference and reading comprehension datasets. This suggests that these models possess strong knowledge transfer capabilities: the ability to apply what they learned during pre-training to a new task described only in their input.

Why does this work? The authors point largely to scale. GPT-3 was pre-trained on roughly 300 billion tokens drawn from a filtered version of Common Crawl, WebText2, two internet-based book corpora and English Wikipedia. Across the model sizes the paper tests, few-shot performance tends to improve as models get bigger, and the gap between zero-shot and few-shot performance often widens too. Broad pre-training gives the model a general grasp of language that it can put to work on a new task from only a few examples. The savings are in task-specific data, not in overall training: GPT-3 needs far fewer labelled examples per task than a fine-tuned model, but pre-training it took an enormous amount of compute.

This paper has exciting implications for natural language processing. It suggests that sufficiently large models can pick up a new task from a small number of examples rather than a large labelled dataset, which makes it much faster to build new tools for understanding language. The study also points to a wide variety of applications that a single model could serve without any task-specific tuning.

This paper challenges the notion that natural language processing systems can only work well when trained on large task-specific datasets. Instead, "Language Models are Few-Shot Learners" shows that a large pre-trained model can achieve strong results on a range of tasks from minimal task-specific data, a significant step towards more general and adaptable NLP systems.

If you are looking to read the original paper, you can do so here!

Below is a quick glossary of some terms related to the paper:

· Few-shot learning: The ability of a model to perform a task after seeing only a small number of task-specific examples. In the GPT-3 paper, these examples are supplied in the model's input at inference time, with no weight updates. This contrasts with traditional machine learning, where a model needs a large amount of labelled data to perform well on a task.

· Language model: A type of AI model trained to predict the next word (more precisely, the next token) in a sequence of text, which lets it generate natural language. These models are typically trained on large amounts of text and can be used for a wide range of language tasks, such as translation, question answering and text classification.

· GPT-3: Generative Pre-trained Transformer 3, the 175-billion-parameter language model developed by OpenAI and introduced in this paper, trained on a massive amount of text data.

· Natural language understanding: The ability of an AI model to understand and interpret human language. This encompasses a wide range of tasks, such as language translation, question answering, sentiment analysis, and text classification.

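· Fine-tuning: The process of adapting a pre-trained model to a specific task by updating its weights on a task-specific labelled dataset, typically thousands to hundreds of thousands of examples. Fine-tuning is the standard way to get strong performance from a pre-trained model on a particular task, and it is what GPT-3's few-shot results are compared against.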

· Transfer learning: The ability of a model to transfer knowledge learned from one task to another.

· Idiomatic expressions: Phrases that cannot be understood based on the meanings of the individual words, but rather by the phrase as a whole.

· Sarcasm recognition: The ability of a model to recognize when someone is being sarcastic in their language.

· Internal representations: The numerical patterns (vectors of activations) a model uses internally to encode what it learned during training.

· Labelled data: Data in which each example is marked with the correct output for a specific task, such as a positive or negative label on a movie review.

Explain Like I'm Five

Imagine you are a robot that can talk and understand lots of different things people say, but you aren't especially good at any one job yet, like writing stories or answering questions. Then some grown-ups show you just a few examples of how to do one of those jobs, and suddenly you can do it really well, the same way you can pick up a new game at school after watching it played a couple of times. That is what this paper is saying: big robots like GPT-3 are really good at learning new things quickly from just a few examples, just like you.

We research, curate and publish daily updates from the field of AI.
