Over the last decade, Google has been at the forefront of AI breakthroughs. Their work in foundation models has become the bedrock for the industry and the AI-powered products that billions of people use daily.

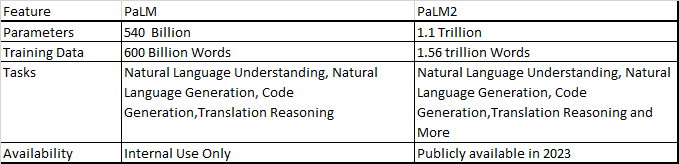

PaLM stands for Pathways Language Model. It was introduced to create a single model that could generalize across domains and tasks while remaining efficient. PaLM is a 540-billion-parameter language model trained on an enormous text corpus, and it demonstrated remarkable capabilities in language comprehension, reasoning, and even code-related tasks, marking a significant leap forward in the field.

Key differences between PaLM and PaLM 2

Google has announced its latest language model, PaLM 2, which has improved multilingual, reasoning, and coding capabilities. It is a faster and more efficient model and comes in a variety of sizes, from Gecko, which is lightweight and can work on mobile devices, to Unicorn, the largest model.

PaLM 2 has been designed to be versatile and can be used for various use cases. The model is powering over 25 Google products and features, including Workspace features, Med-PaLM 2, and Sec-PaLM. The model is also available for developers to use and is powering Duet AI for Google Cloud.

PaLM 2 is heavily trained on multilingual text, spanning more than 100 languages, which has significantly improved its ability to understand, generate, and translate nuanced text across a wide variety of languages. It even passes advanced language proficiency exams at the “mastery” level.

PaLM 2’s wide-ranging dataset includes scientific papers and web pages that contain mathematical expressions, resulting in improved capabilities in logic, common sense reasoning, and mathematics. Additionally, it was pre-trained on a large quantity of publicly available source code datasets, excelling at popular programming languages like Python and JavaScript while also generating specialized code in languages like Prolog, Fortran, and Verilog.

PaLM 2 comes in four sizes: Gecko, Otter, Bison, and Unicorn. This range makes it easy to deploy for a wide variety of use cases, and the lightweight Gecko model is small enough to run on mobile devices.

PaLM 2’s improved multilingual capabilities are allowing Google to expand Bard to new languages, and the model also powers Bard’s recently announced coding update.

Workspace features that help users write in Gmail and Google Docs and organize data in Google Sheets all tap into PaLM 2’s capabilities, at a speed that helps people work better and faster. Med-PaLM 2, trained by Google’s health research teams on medical knowledge, achieves state-of-the-art results in medical competency. It will open to a small group of Cloud customers for feedback later this summer to identify safe, helpful use cases.

Sec-PaLM is a specialized version of PaLM 2 trained on security use cases. Available through Google Cloud, it uses AI to analyze and explain the behavior of potentially malicious scripts.

Developers can now sign up to use the PaLM 2 model, and enterprise customers can use it in Vertex AI with enterprise-grade privacy, security, and governance. PaLM 2 also powers Duet AI for Google Cloud, a generative AI collaborator designed to help users learn, build, and operate faster than ever before.

PaLM 2 shows the impact of highly capable models of various sizes and speeds, and demonstrates that versatile AI models deliver real benefits for everyone. Just as Google is committed to releasing the most helpful and responsible AI tools today, it is also working to create its best foundation models yet.

Building PaLM 2

PaLM 2 was built by combining three distinct research advancements in large language models:

Compute-optimal scaling: This technique scales the model size and the training dataset size in proportion to each other, resulting in a smaller but more efficient model with better overall performance.
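As a rough worked example of compute-optimal scaling, the sketch below assumes the widely used approximation that training compute is C ≈ 6 × N × D FLOPs (N parameters, D training tokens) and a fixed tokens-per-parameter ratio. The default ratio of 20 is the heuristic popularized by DeepMind's Chinchilla study, not a published PaLM 2 figure, and `compute_optimal_split` is a hypothetical helper.

```python
import math


def compute_optimal_split(compute_flops: float, tokens_per_param: float = 20.0) -> tuple[float, float]:
    """Split a training compute budget (in FLOPs) into a parameter count N and token count D.

    Assumes C ~= 6 * N * D and a fixed ratio D = r * N (r is an assumption, not a PaLM 2 value).
    Substituting gives C = 6 * r * N**2, so N = sqrt(C / (6 * r)); N and D both grow
    with sqrt(C), i.e. in proportion to each other.
    """
    n_params = math.sqrt(compute_flops / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


# Quadrupling the compute budget doubles both the model size and the dataset size.
small_n, small_d = compute_optimal_split(1e23)
large_n, large_d = compute_optimal_split(4e23)
print(f"1e23 FLOPs: {small_n:.2e} params, {small_d:.2e} tokens")
print(f"4e23 FLOPs: {large_n:.2e} params, {large_d:.2e} tokens")
```

The point of the proportional rule is that extra compute is shared between a bigger model and more data, instead of going almost entirely into parameters.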

Improved dataset mixture: PaLM 2's pre-training dataset is more diverse and multilingual than previous models like PaLM, including hundreds of human and programming languages, scientific papers, and web pages.
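To make "dataset mixture" concrete, here is a minimal sketch of how a training pipeline might draw examples from several corpora in fixed proportions. The source names and weights are hypothetical; the text does not state PaLM 2's actual mixture.

```python
import random

# Hypothetical mixture weights for illustration only; PaLM 2's real proportions are not given.
MIXTURE_WEIGHTS = {
    "web_pages": 0.40,
    "multilingual_text": 0.25,
    "source_code": 0.20,
    "scientific_papers": 0.15,
}


def sample_sources(weights: dict[str, float], num_examples: int, seed: int = 0) -> list[str]:
    """Pick the source corpus for each training example in proportion to the mixture weights."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=num_examples)


batch_sources = sample_sources(MIXTURE_WEIGHTS, 10_000)
counts = {name: batch_sources.count(name) for name in MIXTURE_WEIGHTS}
print(counts)  # counts are roughly proportional to the weights
```

Changing the weights is how a mixture shifts emphasis, for example toward more multilingual text or more code, without changing the rest of the pipeline.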

Updated model architecture and objective: PaLM 2 has an improved architecture and was trained on a variety of different tasks, allowing it to learn different aspects of language.
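The text does not spell out which tasks PaLM 2 was trained on. As an assumption, the sketch below illustrates the general idea of a mixture of training objectives, in the spirit of Google's UL2 work: each example becomes either a prefix-LM task (continue the text) or a span-corruption task (fill in a hidden span). The function names, the T5-style sentinel token, and the 50/50 weights are all hypothetical.

```python
import random

SENTINEL = "<extra_id_0>"  # T5-style sentinel token, assumed for illustration


def prefix_lm_example(tokens: list[str], rng: random.Random) -> tuple[list[str], list[str]]:
    """Prefix-LM objective: show a prefix, predict the continuation."""
    split = rng.randint(1, len(tokens) - 1)  # both parts are non-empty
    return tokens[:split], tokens[split:]


def span_corruption_example(tokens: list[str], rng: random.Random,
                            span_len: int = 3) -> tuple[list[str], list[str]]:
    """Denoising objective: hide one contiguous span behind a sentinel, predict the span."""
    start = rng.randint(0, len(tokens) - span_len)
    inputs = tokens[:start] + [SENTINEL] + tokens[start + span_len:]
    targets = [SENTINEL] + tokens[start:start + span_len]
    return inputs, targets


OBJECTIVES = {"prefix_lm": prefix_lm_example, "span_corruption": span_corruption_example}


def make_example(tokens: list[str], rng: random.Random,
                 weights: tuple[float, float] = (0.5, 0.5)):
    """Choose an objective per example according to hypothetical mixture weights."""
    name = rng.choices(list(OBJECTIVES), weights=weights, k=1)[0]
    inputs, targets = OBJECTIVES[name](tokens, rng)
    return name, inputs, targets


tokens = "the quick brown fox jumps over the lazy dog".split()
rng = random.Random(0)
for _ in range(3):
    print(make_example(tokens, rng))
```

Because the same text can be turned into different tasks, the model is pushed to learn both left-to-right generation and reconstruction from surrounding context.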

Evaluating PaLM 2

PaLM 2 has been evaluated on a range of potential downstream uses, including dialog, classification, translation, and question-answering. The evaluations focused on identifying potential harms and biases, including toxic language and social bias related to identity terms.

In addition, PaLM 2 has built-in control over toxic generation and improved multilingual toxicity classification. As part of Google's commitment to responsible AI development and safety, the pre-training data was processed to remove sensitive personally identifiable information and to reduce memorization.
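One plausible mechanism for this kind of built-in control, and the one assumed here, is conditioning on control tokens: during pre-training, a small fraction of documents is prefixed with a token describing its toxicity, and at inference time the low-toxicity token is prepended to steer generation. The token strings, the 10% tagging rate, the 0.5 threshold, and the toxicity scores are hypothetical; this is a sketch of the idea, not Google's pipeline.

```python
import random

# Hypothetical control tokens; the real tokens (if any) are not described in the text.
LOW_TOXICITY = "<toxicity:low>"
HIGH_TOXICITY = "<toxicity:high>"


def tag_for_pretraining(text: str, toxicity_score: float, rng: random.Random,
                        tag_fraction: float = 0.1, threshold: float = 0.5) -> str:
    """Prefix a random fraction of pre-training documents with a toxicity control token.

    `toxicity_score` is assumed to come from a separate classifier (0 = benign, 1 = toxic).
    """
    if rng.random() >= tag_fraction:
        return text  # most documents are left untagged
    tag = HIGH_TOXICITY if toxicity_score >= threshold else LOW_TOXICITY
    return f"{tag} {text}"


def steer_prompt(prompt: str) -> str:
    """At inference time, prepend the low-toxicity token to bias generation toward benign text."""
    return f"{LOW_TOXICITY} {prompt}"


rng = random.Random(0)
corpus = [("a friendly product review", 0.02), ("an abusive forum rant", 0.93)]
# tag_fraction=1.0 tags every document so both tags are visible in the output.
print([tag_for_pretraining(text, score, rng, tag_fraction=1.0) for text, score in corpus])
print(steer_prompt("Write a short reply to this comment:"))
```

Tagging only a fraction of the data leaves most documents unchanged while still teaching the model what each control token means.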

PaLM 2 achieves state-of-the-art results on reasoning benchmark tasks like WinoGrande and BigBench-Hard, and is significantly more multilingual than previous models like PaLM, achieving better results on benchmarks like XSum, WikiLingua, and XLSum. It also improves translation capability over PaLM and Google Translate in languages like Portuguese and Chinese.

Overall, PaLM 2's advanced reasoning, multilingual capabilities, and improved performance make it an exciting development in the field of large language models. As AI continues to evolve, responsible development and evaluation will be critical in ensuring the safety and ethical use of these technologies.
