Gated Recurrent Unit (GRU) Neural Network

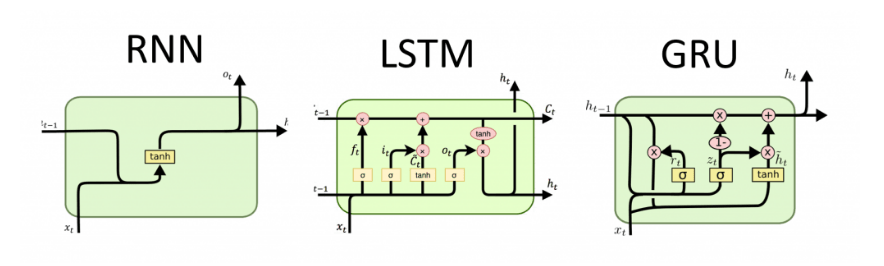

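A Gated Recurrent Unit (GRU) is a recurrent neural network architecture that can learn long-term dependencies. It is similar to an LSTM, but it has no separate memory cell. Instead, it folds the cell state into the hidden state and uses two gates (update and reset) instead of the LSTM's three (input, forget, and output).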
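Here is a minimal sketch of a single GRU time step in plain Python that shows what the gating does. It assumes the common formulation with an update gate z_t, a reset gate r_t, and a candidate state h~_t. The names `gru_step` and `init_params` and the weight keys `W_*`, `U_*`, `b_*` are hypothetical. The weights are small random values, not trained ones. Some references swap the roles of z_t and 1 - z_t in the final interpolation, and both conventions describe the same mechanism.

```python
import math
import random

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    # Matrix-vector product: one dot product per row of m.
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def vadd(*vs: list[float]) -> list[float]:
    # Element-wise sum of several equal-length vectors.
    return [sum(xs) for xs in zip(*vs)]

def gru_step(x: list[float], h_prev: list[float], p: dict) -> list[float]:
    # Update gate: z_t = sigmoid(W_z x_t + U_z h_{t-1} + b_z)
    z = [sigmoid(v) for v in vadd(matvec(p["W_z"], x), matvec(p["U_z"], h_prev), p["b_z"])]
    # Reset gate: r_t = sigmoid(W_r x_t + U_r h_{t-1} + b_r)
    r = [sigmoid(v) for v in vadd(matvec(p["W_r"], x), matvec(p["U_r"], h_prev), p["b_r"])]
    # Candidate state sees a reset-scaled previous state:
    # h~_t = tanh(W_h x_t + U_h (r_t * h_{t-1}) + b_h)
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    h_tilde = [math.tanh(v) for v in vadd(matvec(p["W_h"], x), matvec(p["U_h"], reset_h), p["b_h"])]
    # New state interpolates between old and candidate: h_t = (1 - z_t) * h_{t-1} + z_t * h~_t
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, h_tilde)]

def init_params(input_size: int, hidden_size: int, seed: int = 0) -> dict:
    # Hypothetical initialisation: small uniform random weights, zero biases.
    rng = random.Random(seed)

    def mat(rows: int, cols: int) -> list[list[float]]:
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for g in ("z", "r", "h"):
        p[f"W_{g}"] = mat(hidden_size, input_size)
        p[f"U_{g}"] = mat(hidden_size, hidden_size)
        p[f"b_{g}"] = [0.0] * hidden_size
    return p

params = init_params(input_size=3, hidden_size=4)
h = [0.0] * 4
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):  # a toy two-step input sequence
    h = gru_step(x, h, params)
print(h)
```

The new state is an interpolation between the previous state and the candidate. When a unit's update gate stays near 0, the unit copies its value forward almost unchanged. This is how a GRU carries information across many steps without a separate memory cell.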

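The long-term dependencies problem arises when a recurrent network is trained with backpropagation through time. The gradient signal passing back across many time steps tends to vanish or explode, so the network struggles to learn relationships between inputs that are far apart in a sequence.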

![LSTM Network [RNNs]](/content/images/size/w600/2022/07/Unknown.png)

Long Short-Term Memory (LSTM) networks have been widely used in natural language processing (NLP) for several years.

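Exploding and vanishing gradients are two common problems when training deep and recurrent neural networks with gradient-based methods. A gradient measures how quickly one variable changes with respect to another. In training, it measures how quickly the loss changes with respect to each weight.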
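Here is a toy way to see both problems. In a recurrent network, the gradient that reaches an early time step is a product of one derivative per step. Repeated factors below 1 shrink it toward zero, and repeated factors above 1 blow it up. The sketch assumes the simplest possible case: a scalar linear recurrence h_t = w * h_{t-1} with no input and no nonlinearity. `gradient_through_time` is a hypothetical helper, not a library function.

```python
def gradient_through_time(w: float, steps: int) -> float:
    """Return dh_T/dh_0 for the scalar linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        # Chain rule: each step contributes a factor dh_t/dh_{t-1} = w.
        grad *= w
    return grad

for w in (0.5, 1.0, 1.5):
    print(f"w={w}: gradient after 50 steps = {gradient_through_time(w, 50):.3e}")
```

After 50 steps, w = 0.5 gives a gradient on the order of 1e-15, and w = 1.5 gives one in the hundreds of millions. Only w = 1 keeps it stable. Real networks multiply Jacobian matrices rather than scalars, but the same effect appears when those matrices consistently shrink or stretch the gradient at each step.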

fastText is a library for efficient learning of word representations and sentence vectors. It was developed at Facebook AI Research (FAIR).
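fastText learns word representations by treating each word as a bag of character n-grams, with `<` and `>` marking the word boundaries. It builds the word's vector from the vectors of those n-grams and also keeps a vector for the whole word. The sketch below only extracts the n-grams; it does not call the fastText library. `char_ngrams` is a hypothetical helper. The range n = 3 to 6 mirrors fastText's default `minn`/`maxn` settings for unsupervised training.

```python
def char_ngrams(word: str, minn: int = 3, maxn: int = 6) -> list[str]:
    """Return the character n-grams of `word`, with boundary markers, for n in [minn, maxn]."""
    token = f"<{word}>"  # boundary markers distinguish prefixes and suffixes
    grams = []
    for n in range(minn, maxn + 1):
        # Slide a window of width n across the marked token.
        for i in range(len(token) - n + 1):
            grams.append(token[i : i + n])
    return grams

print(char_ngrams("where", 3, 3))
```

For example, `char_ngrams("where", 3, 3)` yields `<wh`, `whe`, `her`, `ere`, `re>`. Rare or unseen words still share n-grams with known words, so they can still be given a vector.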

GloVe is...

![Word Co-occurrence Matrix [Example]](https://images.unsplash.com/photo-1491234323906-4f056ca415bc?crop=entropy&cs=tinysrgb&fit=max&fm=jpg&ixid=MnwxMTc3M3wwfDF8c2VhcmNofDJ8fHdvcmRzJTIwbWF0cml4fGVufDB8fHx8MTY1NzkyMTc0NA&ixlib=rb-1.2.1&q=80&w=600)

Yesterday we discussed the word co-occurrence matrix. Today we will discuss how to create one.
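The excerpt does not show the post's own method, so what follows is a minimal sketch under two assumptions. First, sentences are already tokenized into lowercase words. Second, two words co-occur when they appear within `window` positions of each other in the same sentence, looking both left and right. `cooccurrence_matrix` and the three-sentence corpus are illustrative, not taken from the original post.

```python
def cooccurrence_matrix(sentences: list[list[str]], window: int = 1) -> tuple[list[str], list[list[int]]]:
    """Count how often each pair of words appears within `window` positions of each other.

    Returns (vocab, matrix), where matrix[i][j] counts co-occurrences of vocab[i] and vocab[j].
    """
    vocab = sorted({w for s in sentences for w in s})
    index = {w: i for i, w in enumerate(vocab)}
    matrix = [[0] * len(vocab) for _ in vocab]
    for sentence in sentences:
        for i, word in enumerate(sentence):
            # Symmetric context window, clipped at the sentence boundaries.
            lo = max(0, i - window)
            hi = min(len(sentence), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # skip the word's own position
                    matrix[index[word]][index[sentence[j]]] += 1
    return vocab, matrix

corpus = [
    "i like deep learning".split(),
    "i like nlp".split(),
    "i enjoy flying".split(),
]
vocab, m = cooccurrence_matrix(corpus, window=1)
for word, row in zip(vocab, m):
    print(f"{word:>8}", row)
```

With `window=1`, the entry for (`i`, `like`) is 2 because the pair appears side by side in two sentences. The matrix is symmetric because the window looks both left and right. Larger windows tend to capture broader topical similarity, at the cost of a denser matrix.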