This week, Stable Diffusion 2.0 was released.

Compared with the original V1, Stable Diffusion 2.0 offers a number of significant improvements and new features:

New Text-to-Image Diffusion Models

OpenCLIP, a brand-new text encoder developed by LAION with support from Stability AI, is included in the Stable Diffusion 2.0 release. It significantly improves the quality of the generated images compared with the earlier V1 releases. The text-to-image models in this release can generate images at resolutions of 512x512 and 768x768 pixels.
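For readers who want to try the two resolutions, here is a minimal sketch using the Hugging Face `diffusers` library. The checkpoint names, the `checkpoint_for` and `generate` helpers, and the choice of `diffusers` itself are assumptions for illustration; none of them come from the announcement.

```python
# Sketch only: the checkpoint ids below are assumptions, not taken from the post.
SD2_CHECKPOINTS = {
    512: "stabilityai/stable-diffusion-2-base",
    768: "stabilityai/stable-diffusion-2",
}


def checkpoint_for(resolution):
    """Return the assumed checkpoint id for a supported square resolution."""
    if resolution not in SD2_CHECKPOINTS:
        raise ValueError(f"unsupported resolution {resolution}; expected 512 or 768")
    return SD2_CHECKPOINTS[resolution]


def generate(prompt, resolution=768, device="cuda"):
    """Generate one image from a text prompt (downloads weights on first use)."""
    # Heavy imports stay local so checkpoint_for() works without them installed.
    import torch
    from diffusers import StableDiffusionPipeline

    # Half precision is only a sensible default on a GPU.
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(
        checkpoint_for(resolution), torch_dtype=dtype
    ).to(device)
    return pipe(prompt, height=resolution, width=resolution).images[0]
```

Calling `generate("a lighthouse at dusk", resolution=512)` would return a PIL image, assuming the weights can be downloaded and a suitable GPU is available.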

Super-resolution Upscaler Diffusion Models

Stable Diffusion 2.0 includes an Upscaler Diffusion model that increases image resolution by a factor of four.
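To make the factor of four concrete, the sketch below computes the output size for a given input and shows one way the upscaler might be called through the Hugging Face `diffusers` library. The checkpoint name and the `upscaled_size` and `upscale` helpers are assumptions for illustration, not part of the announcement.

```python
UPSCALE_FACTOR = 4  # from the post: resolution increases by a factor of four


def upscaled_size(width, height, factor=UPSCALE_FACTOR):
    """Return the (width, height) expected after upscaling."""
    return width * factor, height * factor


def upscale(low_res_image, prompt, device="cuda"):
    """Upscale a PIL image, guided by a text prompt describing its content."""
    # Heavy imports stay local so upscaled_size() works without them installed.
    import torch
    from diffusers import StableDiffusionUpscalePipeline

    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionUpscalePipeline.from_pretrained(
        "stabilityai/stable-diffusion-x4-upscaler",  # assumed checkpoint id
        torch_dtype=dtype,
    ).to(device)
    return pipe(prompt=prompt, image=low_res_image).images[0]
```

Under these assumptions, a 128x128 input would come back at 512x512, which is what `upscaled_size(128, 128)` computes.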

Depth-to-Image Diffusion Model

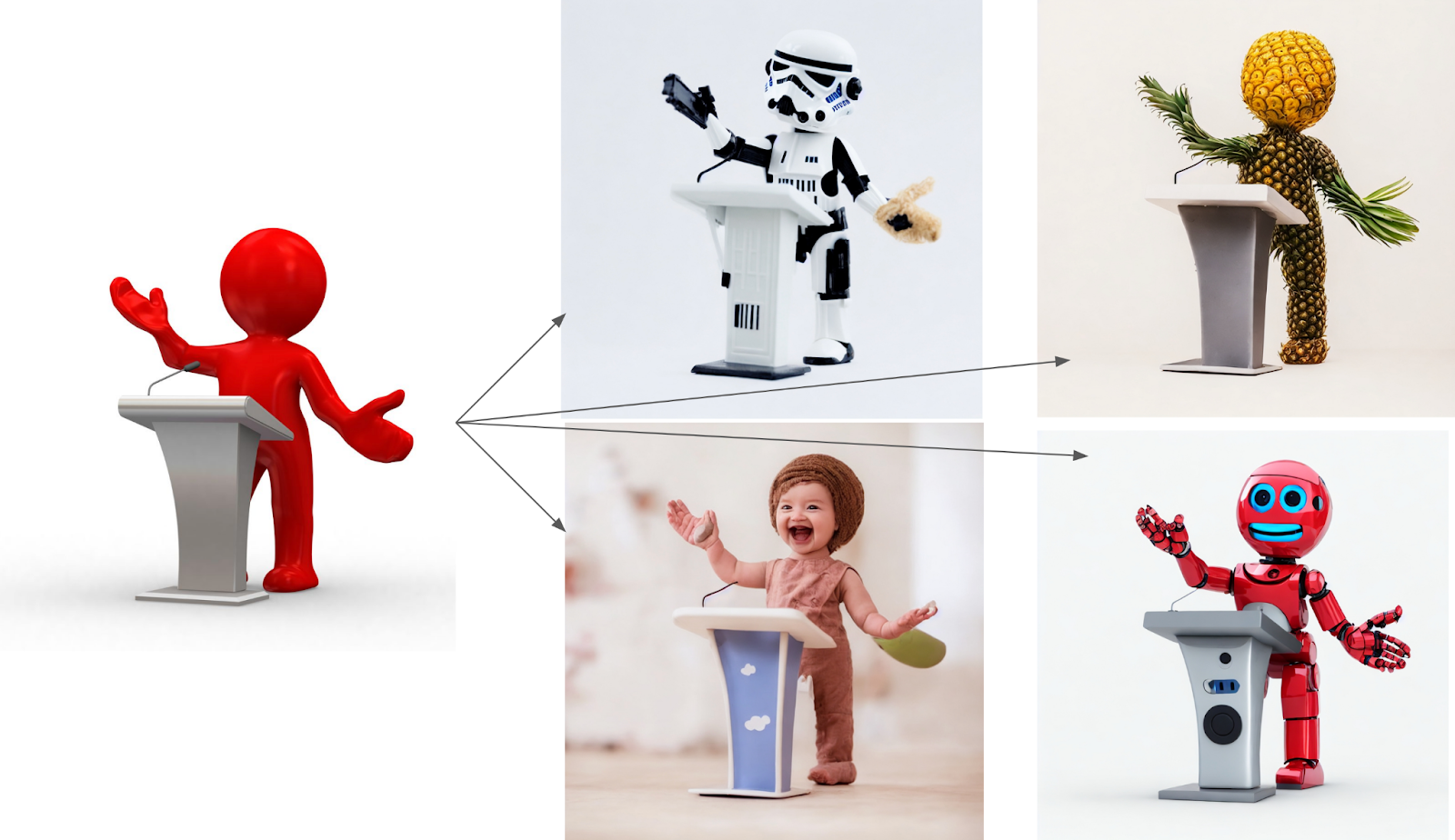

Depth2img infers the depth of an input image (using an existing model) and then generates new images using both the text prompt and that depth information.

Depth-to-Image opens up a wide range of creative applications, enabling transformations that look dramatically different from the original image while preserving its coherence and depth.
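As a rough illustration of that workflow, the sketch below passes an input image and a prompt to a depth-conditioned pipeline from the Hugging Face `diffusers` library. The checkpoint name, the `strength` default, and the `check_strength` and `depth_to_image` helpers are assumptions for illustration; the announcement does not prescribe any of them.

```python
def check_strength(strength):
    """Validate the strength value, which must lie between 0 and 1."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError(f"strength must be in [0, 1], got {strength}")
    return float(strength)


def depth_to_image(init_image, prompt, strength=0.7, device="cuda"):
    """Generate a new image that keeps the depth layout of init_image."""
    # Heavy imports stay local so check_strength() works without them installed.
    import torch
    from diffusers import StableDiffusionDepth2ImgPipeline

    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionDepth2ImgPipeline.from_pretrained(
        "stabilityai/stable-diffusion-2-depth",  # assumed checkpoint id
        torch_dtype=dtype,
    ).to(device)
    # No depth map is passed here; the pipeline is expected to estimate one
    # from init_image with its bundled depth model.
    return pipe(
        prompt=prompt,
        image=init_image,
        strength=check_strength(strength),
    ).images[0]
```

Higher `strength` values let the result depart further from the input image, while the estimated depth map keeps the overall composition in place.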
