What is SOTA in Artificial Intelligence?

SOTA stands for State-Of-The-Art and refers to the models that currently achieve the best results on a specific AI task, usually as measured on a shared benchmark. In this blog, we'll explore the concept of SOTA in AI and its significance, with a particular focus on its applications in Natural Language Processing (NLP).

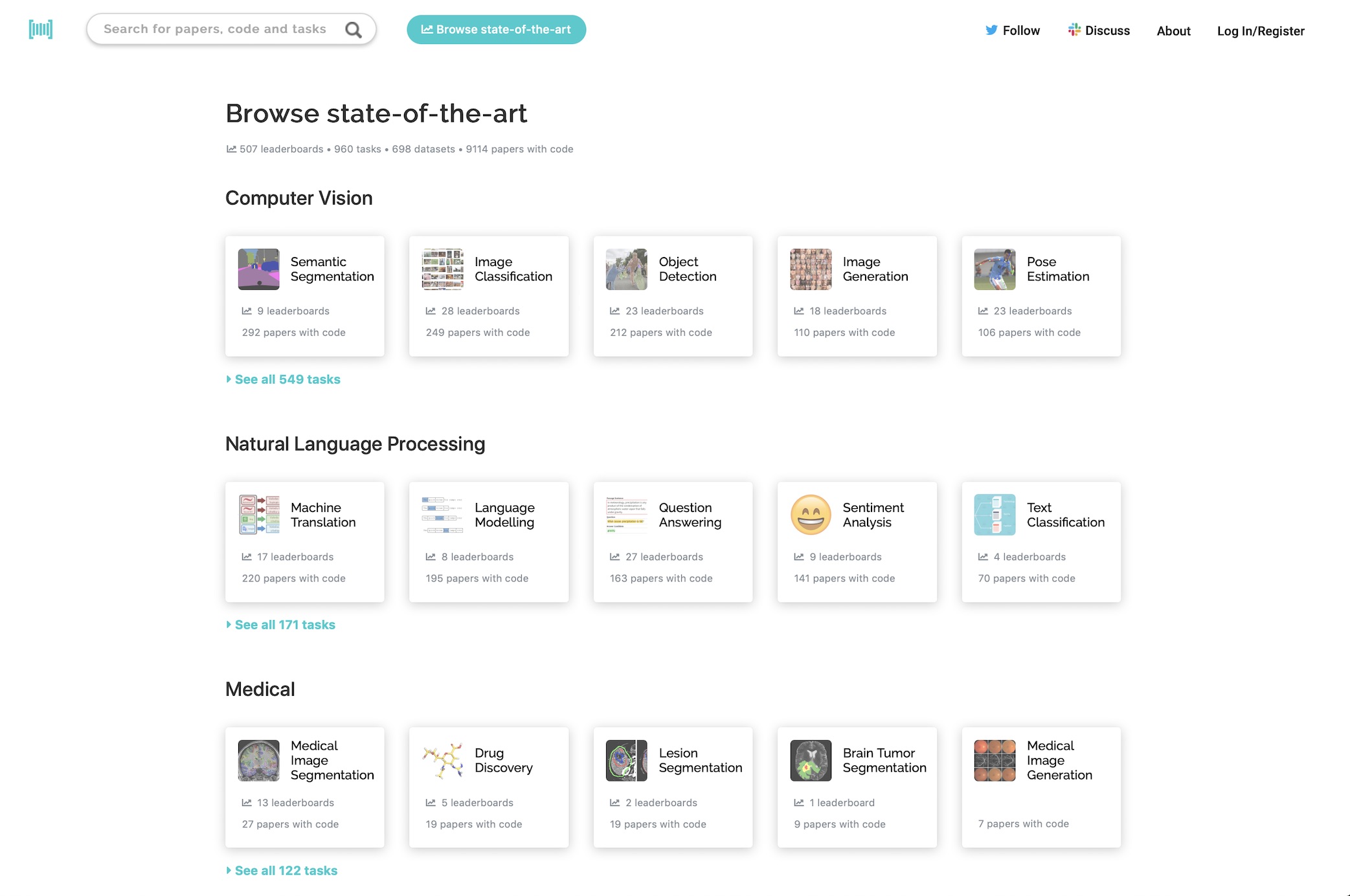

Understanding SOTA in AI

SOTA, as the name suggests, describes the cutting-edge models that push the boundaries of AI performance. The label is always relative to a specific task and benchmark, and it applies across areas such as machine learning, deep neural networks, natural language processing, and more general tasks.

The Benefits of SOTA Models in AI

Implementing SOTA models in AI comes with a range of advantages that enhance the overall performance and reliability of AI tasks.

Increases task precision: SOTA models excel in accuracy, so their output aligns closely with the desired outcome. By scoring highly on evaluation metrics such as precision, recall, or AUC, these models deliver results that meet user requirements.

Enhances reliability: With their high precision, SOTA models provide reliable outcomes, particularly in machine learning and deep neural network tasks. Their results are not one-off lucky runs but can be reproduced consistently. To check this, you can run noise experiments on the SOTA model, for example by repeating the evaluation under different random seeds, measuring the standard deviation of the scores, and comparing the original results with the reproduced ones.

Ensures reproducibility: Reproducibility is crucial for agile and efficient AI product development. SOTA models facilitate this by allowing quick iterations and the ability to ship minimum viable products (MVPs) to customers promptly. Because results are reproducible, user feedback can reliably guide future product improvements.

Reduces generation time: Reproducibility also contributes to faster product generation. By reusing the pre-trained parameters of an existing SOTA model, developers can save significant time compared to starting from scratch. This streamlines the entire process, enabling faster delivery of scalable products.
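The noise experiments mentioned under "Enhances reliability" can be sketched in a few lines of Python. This is only an illustration: `evaluate_once` is a hypothetical stand-in that simulates seed-to-seed noise around a made-up score, where a real project would train or load the model and return its benchmark metric. The two-standard-deviation threshold is likewise an assumption, not a rule from this article.

```python
import random
import statistics


def evaluate_once(seed: int) -> float:
    """Hypothetical stand-in for one full evaluation run.

    A real run would train or load the model with this seed and return
    its benchmark metric. Here we only simulate small seed noise.
    """
    rng = random.Random(seed)
    return 0.90 + rng.uniform(-0.01, 0.01)


def noise_experiment(seeds: list[int]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) of the score across seeds."""
    scores = [evaluate_once(s) for s in seeds]
    return statistics.mean(scores), statistics.stdev(scores)


def is_reproduced(original: float, mean: float, std: float, k: float = 2.0) -> bool:
    """Treat the original score as reproduced if it lies within k std of the mean."""
    return abs(original - mean) <= k * std


mean, std = noise_experiment(seeds=[0, 1, 2, 3, 4])
print(f"mean={mean:.4f} std={std:.4f}")
```

A small standard deviation across seeds, together with an original score that falls inside the reproduced range, is one reasonable sign that a reported result is not a lucky run.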

When to Run SOTA Tests

Running SOTA tests regularly is a fundamental practice in AI, and a weekly frequency is generally recommended. Additionally, it's advisable to run them whenever you make an important change, such as a new model version or a new dataset. Cloud virtual machines and automation pipelines such as Jenkins provide a suitable environment for running these tests efficiently.
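One way to picture such a test is as a regression check that compares fresh metrics against stored baseline values. The sketch below is a minimal example under assumptions: the function name, the metric names, the numbers, and the 0.01 tolerance are all illustrative and not taken from any particular tool.

```python
def check_against_baseline(
    current: dict[str, float],
    baseline: dict[str, float],
    tolerance: float = 0.01,
) -> list[str]:
    """Return the names of metrics that are missing or dropped more than
    `tolerance` below their baseline value."""
    regressions = []
    for name, base_value in baseline.items():
        value = current.get(name)
        if value is None or value < base_value - tolerance:
            regressions.append(name)
    return regressions


# Illustrative numbers only.
baseline = {"precision": 0.91, "recall": 0.88, "auc": 0.95}
current = {"precision": 0.92, "recall": 0.85, "auc": 0.95}

failed = check_against_baseline(current, baseline)
print(failed)  # ['recall']
```

A scheduled pipeline job could run a check like this weekly and after important changes, and mark the build as failed whenever the returned list is not empty.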

Applications of SOTA Models in NLP

SOTA models find applications in various AI activities, and NLP is no exception. Here are a few notable areas, drawn from across AI rather than NLP alone, where SOTA models have been instrumental:

Object detection by deep neural networks

Single-shot multi-box detectors

Self-adaptive tasks involving variable patterns

This list is by no means exhaustive, as the possibilities of SOTA applications extend to numerous branches of AI. Stay tuned for future blogs to explore further applications of SOTA in every subset of AI.

Notably, SOTA pre-trained models, such as BERT, GPT, ELMo, RoBERTa, XLNet, and ELECTRA, have significantly influenced the field of NLP, delivering state-of-the-art results.

BERT (Bidirectional Encoder Representations from Transformers):

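BERT has revolutionized NLP with deeply bidirectional contextual embeddings: it is pre-trained with masked language modeling, so each token's representation draws on the words both to its left and to its right.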

It has achieved state-of-the-art performance on various NLP tasks, including text classification, named entity recognition, and question answering.

For example, on SQuAD 1.1 (the Stanford Question Answering Dataset), BERT exceeded the reported human baseline on machine reading comprehension.
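BERT learns its bidirectional representations through masked language modeling: some input tokens are hidden, and the model has to recover them from the context on both sides. The sketch below shows only the input corruption step, using the 80/10/10 split described in the BERT paper. It makes two simplifying assumptions: tokens are whole words rather than WordPiece units, and each token is selected independently with probability 0.15. The function name `mask_tokens` is hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Corrupt a token list for masked language modeling.

    Returns (inputs, labels). labels[i] holds the original token at the
    positions the model must predict, and None everywhere else.
    """
    inputs, labels = [], []
    for token in tokens:
        if rng.random() < select_prob:
            labels.append(token)
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                inputs.append(token)              # 10%: keep the original token
        else:
            inputs.append(token)
            labels.append(None)
    return inputs, labels


tokens = "the bank raised interest rates after the river bank flooded".split()
inputs, labels = mask_tokens(tokens, vocab=sorted(set(tokens)), rng=random.Random(0))
print(inputs)
print(labels)
```

Because a prediction target can sit anywhere in the sentence, the model is pushed to use words on both sides of it, which is what "bidirectional" refers to here.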

GPT (Generative Pre-trained Transformer):

GPT is known for its ability to generate coherent and human-like text.

It has been used for tasks like language modeling, text completion, and text generation.

For example, GPT-3, a 175-billion-parameter version of the GPT model, has been demonstrated to generate creative stories, write code, and even hold conversations that can be difficult to distinguish from those of a human.
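GPT-style models generate text autoregressively: they predict one token, append it to the sequence, and repeat. The loop below sketches that idea with a toy stand-in for the model. `TABLE` and `toy_next_token` are hypothetical and look only at the last token, whereas a real GPT model conditions on the whole preceding sequence and outputs a probability distribution over its vocabulary.

```python
def generate(next_token, prompt, max_new_tokens=5, stop="<eos>"):
    """Extend `prompt` one token at a time until `stop` or the length limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(tokens)
        if token == stop:
            break
        tokens.append(token)
    return tokens


# Hypothetical stand-in for a trained model: a lookup on the last token only.
TABLE = {"once": "upon", "upon": "a", "a": "time", "time": "<eos>"}


def toy_next_token(tokens):
    return TABLE.get(tokens[-1], "<eos>")


print(" ".join(generate(toy_next_token, ["once"])))  # once upon a time
```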

ELMo (Embeddings from Language Models):

ELMo popularized deep contextualized word embeddings, which capture the meaning of a word based on its surrounding context, so the same word gets a different vector in different sentences.

It has been widely used for various NLP tasks, including sentiment analysis, named entity recognition, and part-of-speech tagging.

For example, ELMo has been used to improve sentiment analysis models by incorporating contextualized word embeddings, resulting in a better understanding of nuanced sentiment in text.

RoBERTa (Robustly Optimized BERT Pretraining Approach):

RoBERTa is an improved version of BERT, designed to address its limitations and achieve even better performance.

It keeps BERT's architecture but is trained for longer, with larger batches, on a larger and more diverse dataset that includes internet text data, and it drops BERT's next-sentence prediction objective.

RoBERTa has been successful in various NLP tasks, such as text classification, natural language inference, and question answering.

For example, at the time of its release RoBERTa reported state-of-the-art results on the GLUE, SQuAD, and RACE benchmarks.

XLNet (built on Transformer-XL):

XLNet introduced permutation language modeling, in which the model is trained to predict tokens under randomly sampled orderings of the input sequence, so that in expectation each token learns from context on both sides.

It has achieved state-of-the-art performance on various benchmarks, including GLUE (General Language Understanding Evaluation) and SQuAD.

For example, XLNet has been used in the GLUE benchmark, where it surpassed previous models and achieved the highest scores on multiple NLP tasks, including sentiment analysis, textual entailment, and semantic similarity.
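Permutation language modeling is easier to see with a small example. The sketch below samples one prediction order for a sentence and lists which positions are visible at each step. It illustrates the idea only and is not XLNet's implementation: the tokens never move from their original positions, and only the order in which they are predicted changes. The function name `permutation_contexts` is hypothetical.

```python
import random


def permutation_contexts(tokens, rng):
    """Sample one factorization order and list what each prediction step may see.

    Returns a list of (target_position, visible_positions) pairs, one per step.
    """
    order = list(range(len(tokens)))
    rng.shuffle(order)
    steps = []
    for step, target in enumerate(order):
        visible = sorted(order[:step])  # positions earlier in the sampled order
        steps.append((target, visible))
    return steps


tokens = ["New", "York", "is", "a", "city"]
for target, visible in permutation_contexts(tokens, random.Random(0)):
    context = [tokens[i] for i in visible]
    print(f"predict {tokens[target]!r} (position {target}) given {context}")
```

Across many sampled orders, each position ends up being predicted from words on its left, on its right, or both, which is how an autoregressive model can still learn bidirectional context.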

ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately):

ELECTRA is pre-trained with replaced token detection: a small generator fills in masked tokens with plausible alternatives, and a discriminator learns to tell which tokens are original and which were replaced. Although this setup resembles a GAN, the generator is trained with maximum likelihood rather than adversarially.

It has shown impressive results in various NLP tasks, such as text classification, named entity recognition, and question answering.

For example, the ELECTRA authors reported strong results on the GLUE and SQuAD benchmarks while using less pre-training compute than comparable models.
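ELECTRA's discriminator is trained on a replaced token detection task: for every position in a corrupted sentence, it decides whether the token is the original or a replacement produced by a small generator. The sketch below builds those per-token labels only. The corrupted sentence is written by hand as a hypothetical generator output, and the function name `replaced_token_labels` is an assumption.

```python
def replaced_token_labels(original, corrupted):
    """Return one label per position: 1 if the token was replaced, else 0.

    A sampled token identical to the original counts as original (label 0).
    """
    if len(original) != len(corrupted):
        raise ValueError("sequences must have the same length")
    return [int(o != c) for o, c in zip(original, corrupted)]


original = ["the", "chef", "cooked", "the", "meal"]
# Hypothetical generator output: position 2 was masked and re-sampled as "ate".
corrupted = ["the", "chef", "ate", "the", "meal"]

print(replaced_token_labels(original, corrupted))  # [0, 0, 1, 0, 0]
```

Because every position gets a label, not just the masked ones, the discriminator receives a training signal from the whole input, which is the main source of ELECTRA's efficiency according to its authors.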

These SOTA pre-trained models have pushed the boundaries of NLP and continue to drive advancements in the field, enabling breakthroughs in a wide range of applications and improving the overall understanding and generation of human language.
