Amidst the Christmas cheer and the rustle of wrapping paper, Dr. Ingrid, surrounded by the gifts she had bought from the village market, found her mind wandering to a recent topic of interest: convolutional neural networks (CNNs). She decided to call Dr. Silverman, known for his expertise in the field.

"Hello, Dr. Silverman," Dr. Ingrid began as he answered. "I've been reading about CNNs, and I'm particularly curious about nonlinearities and their role. Could you shed some light on this?"

The Role of Nonlinearities

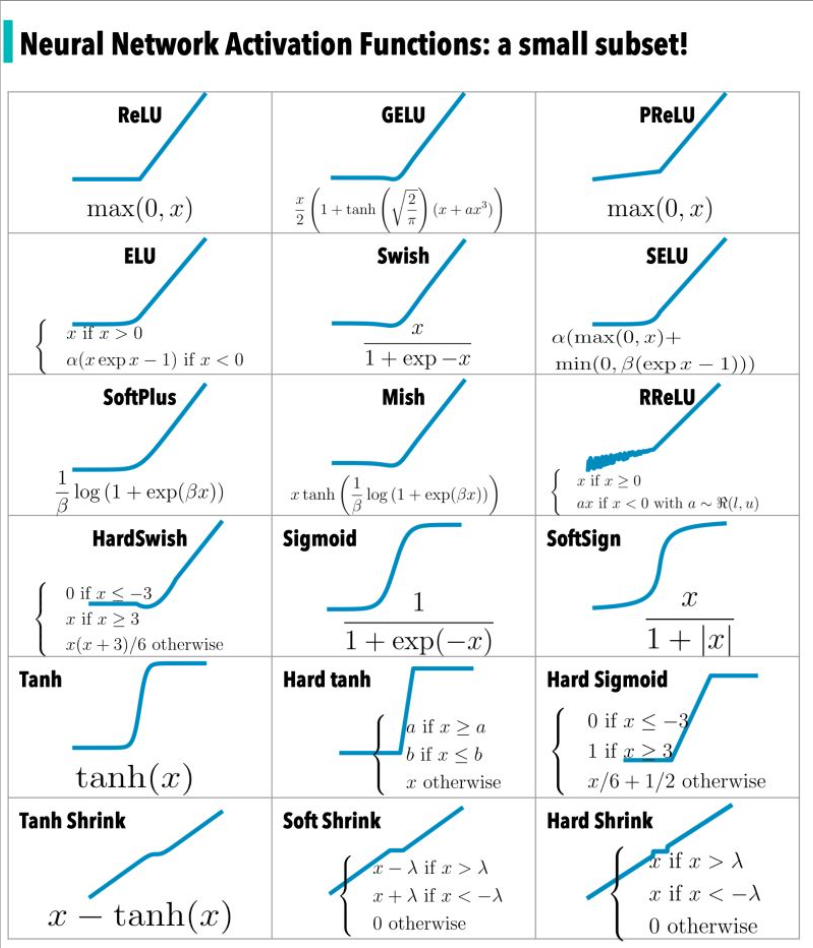

Dr. Silverman, always eager to discuss neural networks, replied, "Of course, Dr. Ingrid! Nonlinearities, or activation functions, are what make neural networks powerful. They let a network learn and represent complex patterns that a purely linear model cannot. Think of them as spices in a recipe: they add unique flavors to the dish, making it more than just a blend of ingredients."

Dr. Ingrid, intrigued, asked, "What are the different types of these nonlinearities?"

"Well," Dr. Silverman explained, "there are several types, each with its own properties. For instance, the ReLU (Rectified Linear Unit) function is like adding a pinch of salt: it enhances the flavor without overpowering the dish. It passes positive values through unchanged and sets negative values to zero."
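
For readers who like to see the idea in code, here is a minimal pure-Python sketch of ReLU, f(x) = max(0, x). The function name `relu` and the sample values are illustrative; deep learning libraries ship their own vectorized versions.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: keep positive values, replace negative values with zero."""
    return max(0.0, x)


values = [-2.0, -0.5, 0.0, 1.5, 3.0]
print([relu(v) for v in values])  # [0.0, 0.0, 0.0, 1.5, 3.0]
```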

Impact on Learning

"And how do these nonlinearities impact learning in CNNs?" Dr. Ingrid inquired further.

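Dr. Silverman answered, "They are what make depth worthwhile. Without them, every layer would only apply a linear transformation to the output of the one before, and a stack of linear transformations collapses into a single one, however many layers you add. The network would be like a cookbook with only simple recipes, unable to handle complex dishes or flavors. Nonlinearities let the network learn intricate patterns, much like a chef mastering a diverse cuisine."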
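
The point about linear layers collapsing can be checked with a few numbers. The sketch below is a hypothetical example in plain Python: the weight matrices `W1` and `W2` are made up, and biases are left out for simplicity. Applying the two layers one after the other gives exactly the same result as a single layer whose matrix is the product W2 × W1; putting a ReLU between them breaks that equivalence.

```python
def matvec(W, x):
    """Multiply a matrix W (a list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def relu_vec(v):
    """Apply ReLU to each element of a vector."""
    return [max(0.0, vi) for vi in v]


# Hypothetical weights for two layers (biases omitted for simplicity).
W1 = [[1.0, -2.0], [3.0, 0.5]]
W2 = [[2.0, 1.0], [-1.0, 4.0]]
x = [1.0, 2.0]

two_layers = matvec(W2, matvec(W1, x))  # layer 1, then layer 2
one_layer = matvec(matmul(W2, W1), x)   # one layer using the combined matrix W2 @ W1
print(two_layers, one_layer)            # [-2.0, 19.0] [-2.0, 19.0]

with_relu = matvec(W2, relu_vec(matvec(W1, x)))  # ReLU between the two layers
print(with_relu)                                 # [4.0, 16.0]
```

With biases included the layers are affine rather than linear, but the same collapse happens: a composition of affine maps is still a single affine map.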

The Trade-Offs

Dr. Ingrid, pondering the implications, asked, "What about the trade-offs of using different nonlinearities?"

"That's an important aspect," Dr. Silverman agreed. "Each nonlinearity has its strengths and weaknesses. For example, while ReLU is simple and effective, it can sometimes lead to 'dead neurons': if a neuron's input ends up negative for every example, both its output and its gradient are zero, so it stops learning. It's like having a spice that sometimes loses its flavor."
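
A small sketch makes the 'dead neuron' concrete. It assumes a hypothetical single neuron z = w·x + b followed by ReLU, with a large negative bias so that z is negative for every input in this toy batch, and an upstream gradient fixed at 1.0 for simplicity. Because ReLU's gradient is zero for negative inputs, plain gradient descent never moves w or b.

```python
def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise (0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0


# Hypothetical neuron z = w * x + b with a large negative bias.
w, b = 0.5, -5.0
learning_rate = 0.1
inputs = [1.0, 2.0, 0.5, 3.0]  # toy batch: z is negative for every one of these

for x in inputs:
    z = w * x + b
    upstream = 1.0                   # assumed gradient of the loss w.r.t. relu(z)
    local = upstream * relu_grad(z)  # chain rule through ReLU: 0.0 for negative z
    w -= learning_rate * local * x   # dL/dw = local * x
    b -= learning_rate * local       # dL/db = local

print(w, b)  # 0.5 -5.0: the neuron never changes
```

In a real network a neuron is only truly dead if its input stays negative for essentially every example in the training data; one unlucky batch is not enough.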

Real-World Applications

As they discussed, Dr. Ingrid related these concepts to real-world applications. "So, in image recognition, these nonlinearities would help the CNN distinguish complex patterns in images, much like identifying subtle flavors in a complex dish?"

"Exactly," Dr. Silverman confirmed. "They enable the network to discern nuances in visual data, which is what lets it recognize objects and make accurate predictions."
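
In a CNN, a convolution is typically followed directly by a nonlinearity such as ReLU. The toy sketch below assumes a hypothetical 2×4 grayscale "image" and a 1×2 edge-detecting kernel; the helper names `conv2d_valid` and `relu_map` are illustrative. The convolution responds positively to a dark-to-bright step and negatively to a bright-to-dark one, and the ReLU then keeps only the positive responses, so the resulting feature map marks just one kind of edge.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1), summing elementwise products.

    Like most CNN libraries, this is technically cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu_map(feature_map):
    """Apply ReLU to every value in a 2-D feature map."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hypothetical image: dark (0), then bright (9), then dark again.
image = [
    [0, 9, 9, 0],
    [0, 9, 9, 0],
]
kernel = [[-1, 1]]  # positive for dark-to-bright steps, negative for bright-to-dark

features = conv2d_valid(image, kernel)
print(features)            # [[9, 0, -9], [9, 0, -9]]
print(relu_map(features))  # [[9, 0, 0], [9, 0, 0]]
```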

A Spark of Curiosity

The conversation left Dr. Ingrid feeling enlightened and inspired, her curiosity about CNNs further ignited. "Thank you, Dr. Silverman. This has been a fascinating discussion. It's amazing how these concepts find parallels in everyday life."

Dr. Silverman chuckled, "That's the beauty of AI and neural networks, Dr. Ingrid. They're as intriguing as they are complex."

As Dr. Ingrid turned back to her gift wrapping, her mind buzzed with her newfound understanding of nonlinearities in CNNs: a perfect blend of festive joy and scientific discovery.

Diving Deeper into Nonlinearities

After their initial discussion, Dr. Ingrid, still on the call, was eager to delve deeper. "Dr. Silverman, could you give me more examples of nonlinearities and guide me on when to use which?"

"Certainly," Dr. Silverman replied. "Let's explore a few more types."

Sigmoid Function

"The sigmoid function," he began, "is like a dimmer switch in a room. It takes any value and squishes it into the range between 0 and 1. It's useful for binary classification, such as yes or no, or true or false. But it's used less in deep networks because it tends to cause 'vanishing gradients': its slope is never more than 0.25, and during training the error signal is multiplied by that slope at every layer it passes back through, so it can shrink until it barely updates the weights of the early layers."
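
Here is a minimal sketch of the sigmoid, σ(x) = 1 / (1 + e^(-x)), and its derivative σ(x)(1 - σ(x)). The final loop is a deliberately simplified illustration: it multiplies only the best-case sigmoid slope of 0.25 once per layer and ignores the weights, which in a real network can also shrink or amplify the gradient.

```python
import math


def sigmoid(x: float) -> float:
    """Squash a real number into the open interval (0, 1).

    This textbook form overflows for very negative x (below about -709);
    production implementations usually guard against that.
    """
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s * (1 - s), which peaks at 0.25 when x == 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


print(sigmoid(0.0))       # 0.5
print(sigmoid_grad(0.0))  # 0.25

for depth in (1, 5, 10):
    # Best case: the largest possible sigmoid slope, multiplied once per layer.
    print(f"{depth:2d} layers: {0.25 ** depth:.2e}")
```

Even in this best case, the factor drops below 0.001 after 5 layers and below one in a million after 10.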

Tanh Function

"Then there's the tanh, or hyperbolic tangent, function," he continued. "It's similar to the sigmoid but squishes values between -1 and 1, centered on zero. It's like a more balanced dimmer switch that can go below neutral as well as above it. It was popular before ReLU, but it also suffers from vanishing gradients in deep networks, because its slope flattens out for large positive or negative inputs."
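
Python's standard library already provides `math.tanh`, so a sketch only needs the derivative, 1 - tanh(x)². Printing a few values shows the zero-centered outputs and how the slope, which is 1 at x = 0, drops toward zero for large |x|; that flattening is the source of the vanishing gradients. (tanh is a rescaled sigmoid: tanh(x) = 2σ(2x) - 1.)

```python
import math


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)**2, equal to 1 at x == 0 and near 0 for large |x|."""
    t = math.tanh(x)
    return 1.0 - t * t


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    # Outputs are symmetric around zero, unlike the sigmoid's (0, 1) range.
    print(f"x={x:+.1f}  tanh={math.tanh(x):+.4f}  slope={tanh_grad(x):.4f}")
```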

Leaky ReLU

Dr. Silverman moved on to another variation. "Leaky ReLU is a twist on the regular ReLU. Instead of zeroing out negative values, it lets a small fraction of them through, for example 0.01 times the input, like a leaky faucet. Because the gradient for negative inputs is small but never zero, neurons keep learning, which alleviates ReLU's 'dead neuron' problem."
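
A minimal sketch of Leaky ReLU and its gradient, assuming a negative slope `alpha` of 0.01 (a common default, but a tunable hyperparameter). Unlike ReLU's, its gradient for negative inputs is `alpha` rather than 0, so some learning signal always gets through.

```python
def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Pass positive inputs unchanged; scale negative inputs by the small slope alpha."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Gradient: 1 for positive inputs, alpha (not 0) for the rest."""
    return 1.0 if x > 0 else alpha


print([leaky_relu(v) for v in (-2.0, -0.5, 1.5)])  # [-0.02, -0.005, 1.5]
print(leaky_relu_grad(-2.0))                        # 0.01
```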

Softmax Function

"And finally, there's the softmax function," he concluded. "It's used primarily in the output layer of classification networks. Think of it as a voting system where each neuron's output is a 'vote,' and softmax turns these votes into probabilities that are all positive and add up to 1, with the strongest vote getting the largest share. It's particularly useful in multi-class classification, like determining the type of object in an image."
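
Here is a minimal softmax sketch in plain Python. The raw scores are hypothetical outputs of a three-class image classifier (cat, dog, bird). Subtracting the largest score before exponentiating is a standard numerical-stability trick; it leaves the probabilities unchanged because the common factor cancels in the division.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores ("votes") into probabilities that are positive and sum to 1."""
    # Shifting by the max score keeps math.exp from overflowing on large scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes: cat, dog, bird.
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```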

Choosing the Right Function

Dr. Ingrid pondered, "So, choosing the right function depends on the specific task and the network's depth?"

"Exactly," Dr. Silverman affirmed. "It's about understanding your network's needs and the problem you're trying to solve. No single function fits all scenarios. It's a bit like choosing the right seasoning for a dish: it depends on the ingredients and the desired flavor."

Their conversation ended with Dr. Ingrid feeling more confident about her understanding of CNNs and nonlinearities. "Thank you, Dr. Silverman, for this insightful discussion. It's like opening a box of assorted chocolates; each nonlinearity has its unique flavor and use!"

Dr. Silverman chuckled, "Well said, Dr. Ingrid. The world of neural networks is indeed rich with choices and possibilities."

As they hung up, Dr. Ingrid gazed at her array of wrapped Christmas gifts, her mind buzzing with ideas for her own research, inspired by the diverse world of nonlinear functions in CNNs.

Enjoyed unraveling the mysteries of AI with Everyday Stories? Share this gem with friends and family who'd love a jargon-free journey into the world of artificial intelligence!

Read the entire series: http://everydayseries.com/advent-calendar/