LLAMA 2 is a continuation of the LLaMA 1 formula. The new model incorporates significant technical expansions, encompassing data quality improvements, advanced training techniques, comprehensive evaluation of capabilities, safety training, and responsible release practices. In a time when research sharing is at an all-time low and regulatory capture looms over the AI landscape, LLAMA 2 is a pivotal development within the LLM ecosystem.

Image Credits: Meta

Enhanced Technical Features:

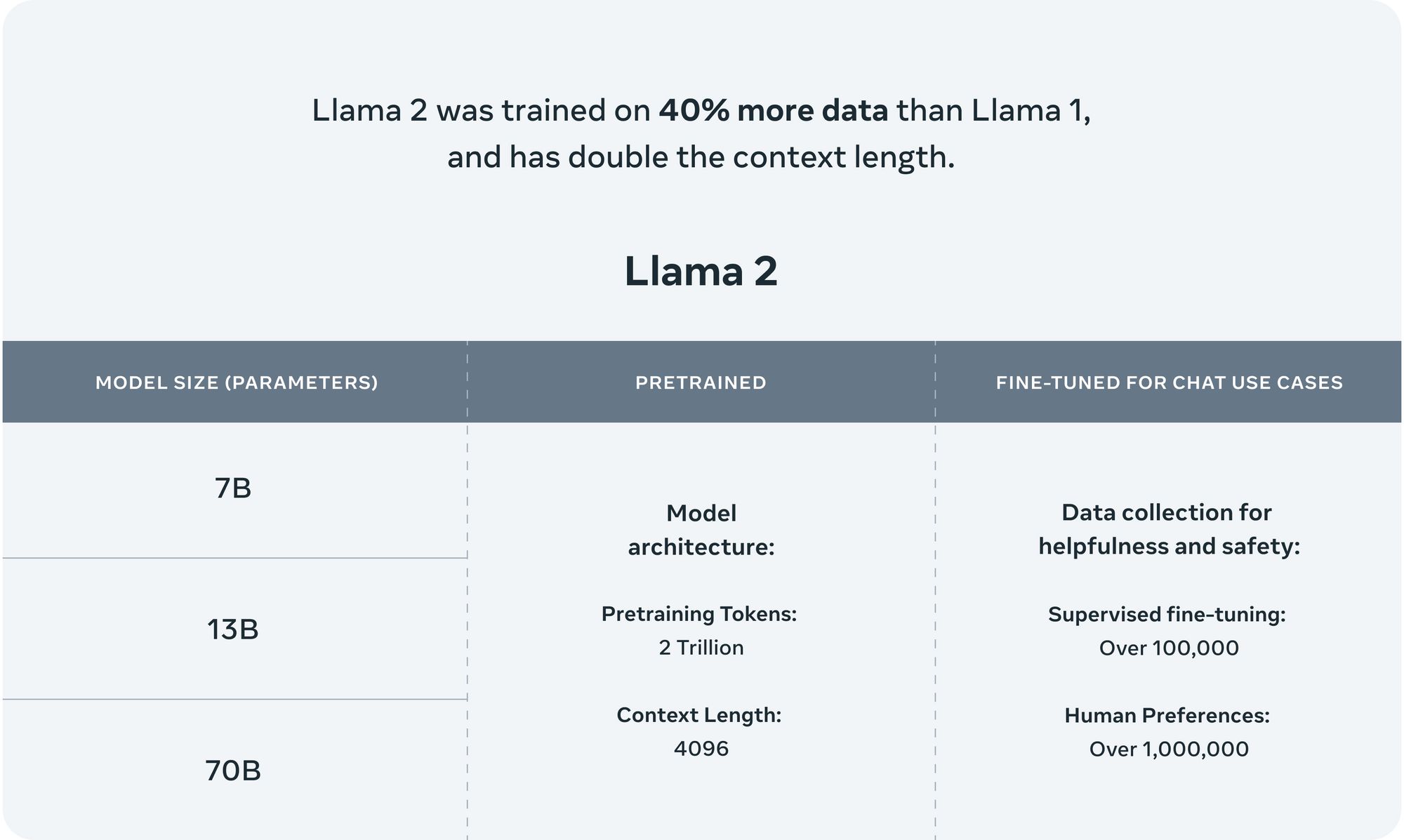

LLAMA 2 builds upon its predecessor by introducing substantial technical advancements, and the technical paper details each area of improvement. Meta, the organization behind LLAMA 2, has increased the size of the pretraining corpus by 40% and doubled the model's context length to 4k tokens. Additionally, the adoption of grouped-query attention (GQA), as presented by Ainslie et al. in 2023, improves inference scalability for the larger models.

Image Credits: Meta

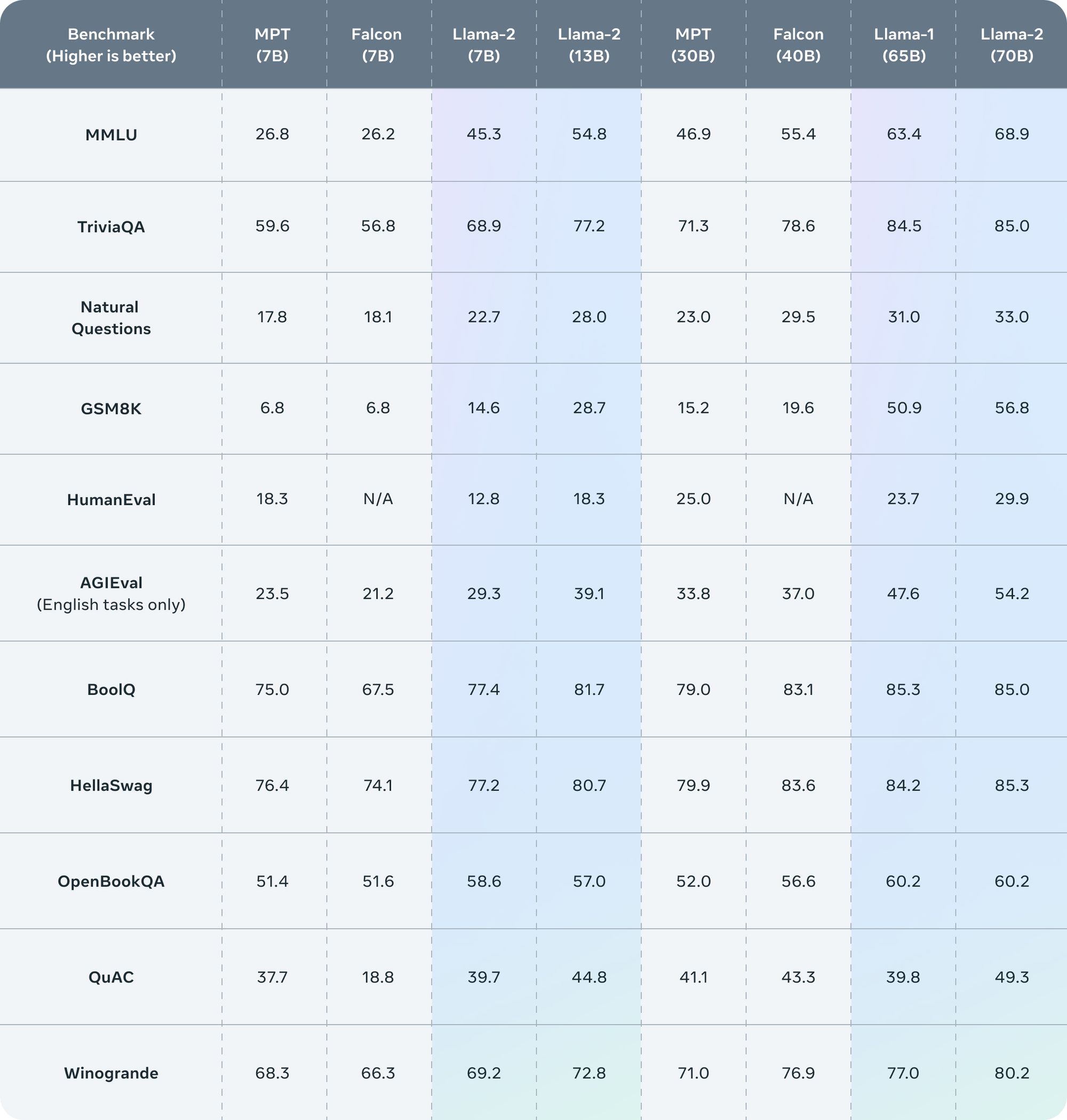

Benchmarks: Llama 2 outperforms other open-source language models on many external benchmarks, including reasoning, coding, proficiency, and knowledge tests.

Unparalleled Capabilities:

Extensive benchmarking and rigorous analysis have led to the realization that LLAMA 2's base model surpasses even GPT-3 in terms of strength. Furthermore, the fine-tuned chat models demonstrate performance on par with ChatGPT. This breakthrough represents a significant stride forward for open-source models, posing a substantial challenge to closed-source providers. LLAMA 2 offers superior customizability and reduced costs, making it an attractive option for numerous companies.

Considerable Investment and Commitment:

The development of LLAMA 2 was accompanied by extensive budgets and unwavering commitment. Estimates suggest that approximately $25 million was invested in preference data alone, assuming market rates. Such a substantial investment underscores the immense resources required to create a general model of this magnitude.

Meta Organization and Structural Changes:

Observations indicate that Meta AI, the organization behind LLAMA 2, has undergone organizational changes. It appears to operate independently from Yann LeCun and the original FAIR (Facebook AI Research) team, signaling Meta's growing influence and distinct approach within the AI domain.

Focus on Multi-Turn Consistency:

LLAMA 2 introduces a novel method called Ghost Attention (GAtt) for achieving multi-turn consistency, that is, keeping the model faithful to an initial instruction across the later turns of a dialogue. Inspired by context distillation, this technique represents an interim solution to enhance model performance until further advancements in training methods are made.
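To make the idea concrete, here is a minimal sketch of how a GAtt training sample could be assembled, following the recipe described in the paper: attach the instruction to every user message, sample replies, then keep the instruction only in the first turn and train only on the final reply. The function name `build_gatt_sample`, the `generate` callable, and the per-turn (rather than per-token) loss mask are all assumptions made for illustration, not Meta's code.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (user_message, assistant_reply)


def build_gatt_sample(
    instruction: str,
    user_messages: List[str],
    generate: Callable[[List[Turn], str], str],  # hypothetical model sampler
) -> Tuple[List[Turn], List[bool]]:
    """Assemble one GAtt-style training dialogue (illustrative sketch)."""
    # Step 1: attach the instruction to every user message and sample replies,
    # so each reply is produced with the instruction directly in view.
    sampled: List[Turn] = []
    for message in user_messages:
        augmented = f"{instruction} {message}"
        reply = generate(sampled, augmented)
        sampled.append((augmented, reply))

    # Step 2: for training, keep the instruction only in the first user turn.
    training_turns: List[Turn] = []
    for i, (message, (_, reply)) in enumerate(zip(user_messages, sampled)):
        user_text = f"{instruction} {message}" if i == 0 else message
        training_turns.append((user_text, reply))

    # Step 3: only the final assistant reply contributes to the loss; earlier
    # turns are masked out (simplified here to one flag per turn).
    last = len(training_turns) - 1
    loss_mask = [i == last for i in range(len(training_turns))]
    return training_turns, loss_mask


def toy_generate(history: List[Turn], user_message: str) -> str:
    # Stand-in for a real model: reports which turn it is answering.
    return f"reply-{len(history)}"


turns, mask = build_gatt_sample(
    "Always answer as a pirate.", ["Hi", "What now?"], toy_generate
)
```

The model is then fine-tuned on dialogues where the instruction is visible only once, yet the target replies were generated as if it had been repeated every turn.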

Safety and Responsible Development:

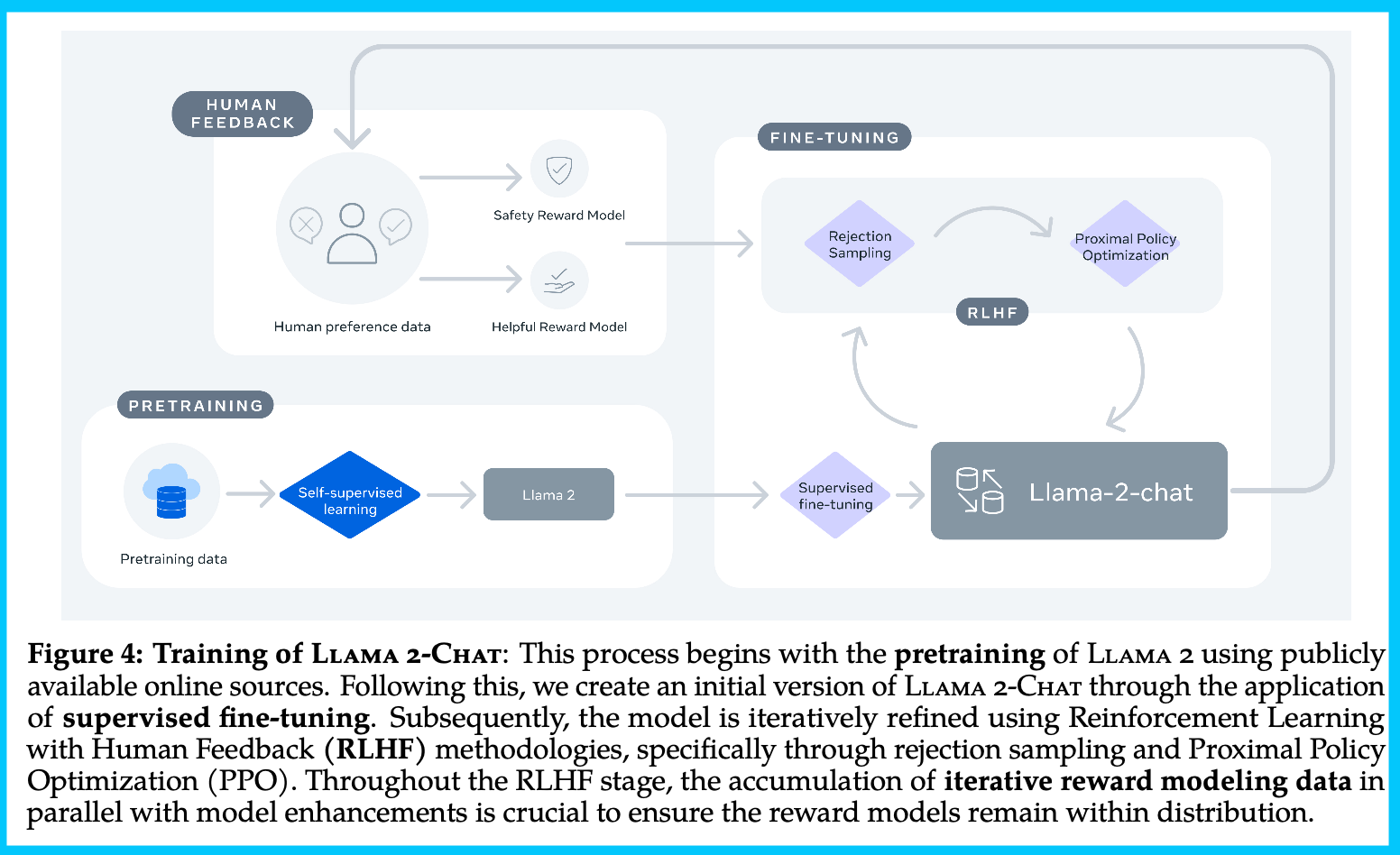

Safety evaluation takes a prominent role in LLAMA 2, occupying nearly half of the technical research paper. Detailed examinations of context distillation and reinforcement learning from human feedback (RLHF) are conducted to ensure safe and responsible model behavior. While the results are not flawless and limitations remain, LLAMA 2 represents a step in the right direction towards addressing safety concerns.

Commercial Availability and Licensing:

LLAMA 2 is available for commercial use, except for products or services with more than 700 million monthly active users, which must request a separate license from Meta. Interested parties can access the model by submitting a form, which also grants permission to download the model from the Hugging Face Hub. Further information regarding licensing can be found in the "Llama 2 Community License Agreement" referenced in the download form.

Philosophy: The LLAMA 2 paper reveals Meta's dedication to its goals and the distinctive position it holds within the AI landscape. Meta's organizational dynamics and its commitment to trust, accountability, and the democratization of AI through open-source solutions are evident. Notably, the notion of democratization is intriguing, considering the existing power imbalances in AI development and utilization. The paper also hints at potential political and practical considerations, such as the collection of publicly available online sources, potentially to acquire data before certain platforms restrict access.

Base Model: LLAMA 2 retains a similar architecture to its predecessor, with notable improvements in data and training processes. The expansion of context length and the implementation of grouped-query attention (GQA) enhance the model's usability and inference speed. However, specific details regarding data sources, particularly those containing personal information, remain undisclosed, leaving room for further investigation.
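As a rough illustration of grouped-query attention, the NumPy sketch below lets several query heads share one key/value head. It assumes a single sequence with causal masking; the head counts and dimensions are illustrative assumptions, not LLAMA 2's actual configuration.

```python
import numpy as np


def grouped_query_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Causal attention where groups of query heads share one K/V head.

    q: (n_q_heads, seq_len, head_dim)
    k, v: (n_kv_heads, seq_len, head_dim), with n_q_heads % n_kv_heads == 0
    """
    n_q_heads, seq_len, head_dim = q.shape
    n_kv_heads = k.shape[0]
    assert n_q_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group_size = n_q_heads // n_kv_heads

    # Each K/V head serves `group_size` consecutive query heads.
    k = np.repeat(k, group_size, axis=0)
    v = np.repeat(v, group_size, axis=0)

    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)

    # Causal mask: position i may only attend to positions <= i.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)

    # Numerically stable softmax over the key axis.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v


rng = np.random.default_rng(0)
q = rng.normal(size=(8, 5, 16))  # 8 query heads (illustrative)
k = rng.normal(size=(2, 5, 16))  # 2 shared key heads
v = rng.normal(size=(2, 5, 16))  # 2 shared value heads
out = grouped_query_attention(q, k, v)
```

With 8 query heads and 2 key/value heads, the key/value cache kept during generation is a quarter of the size needed by standard multi-head attention, which is where the inference benefit comes from.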

RLHF and Fine-Tuning: The RLHF process plays a crucial role in enhancing LLAMA 2's performance. Meta leverages reinforcement learning techniques, such as Proximal Policy Optimization (PPO) and Rejection Sampling (RS) fine-tuning, to refine the model. Fine-tuning iterations, coupled with progressively improved reward models, contribute to the model's overall development. The paper emphasizes the importance of data quality and the synergy between humans and language models in achieving successful RLHF outcomes.
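The rejection sampling step can be sketched as follows: draw several candidate responses per prompt, score each with the reward model, and keep the highest-scoring one as a fine-tuning target. This is a simplified sketch; `rejection_sample`, `sample`, and `reward` are hypothetical names, and the toy stand-ins below replace a real language model and reward model.

```python
from itertools import cycle
from typing import Callable, List, Tuple


def rejection_sample(
    prompts: List[str],
    sample: Callable[[str], str],         # hypothetical: draws one response
    reward: Callable[[str, str], float],  # hypothetical: scores (prompt, response)
    k: int = 4,
) -> List[Tuple[str, str]]:
    """For each prompt, sample k responses and keep the best-scoring one."""
    best_pairs: List[Tuple[str, str]] = []
    for prompt in prompts:
        candidates = [sample(prompt) for _ in range(k)]
        best = max(candidates, key=lambda response: reward(prompt, response))
        best_pairs.append((prompt, best))
    return best_pairs


# Toy stand-ins so the sketch runs without a real model.
_canned = cycle(["ok", "a longer answer", "mid answer"])


def toy_sample(prompt: str) -> str:
    return next(_canned)


def toy_reward(prompt: str, response: str) -> float:
    # Pretend longer responses are better; a real reward model is learned.
    return float(len(response))


sft_data = rejection_sample(["Explain GQA."], toy_sample, toy_reward, k=3)
```

The selected pairs then serve as supervised fine-tuning data for the next model iteration, so the quality of each round depends directly on how well the reward model ranks candidates.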

Meta iteratively trains 5 RLHF versions with progressing data distributions

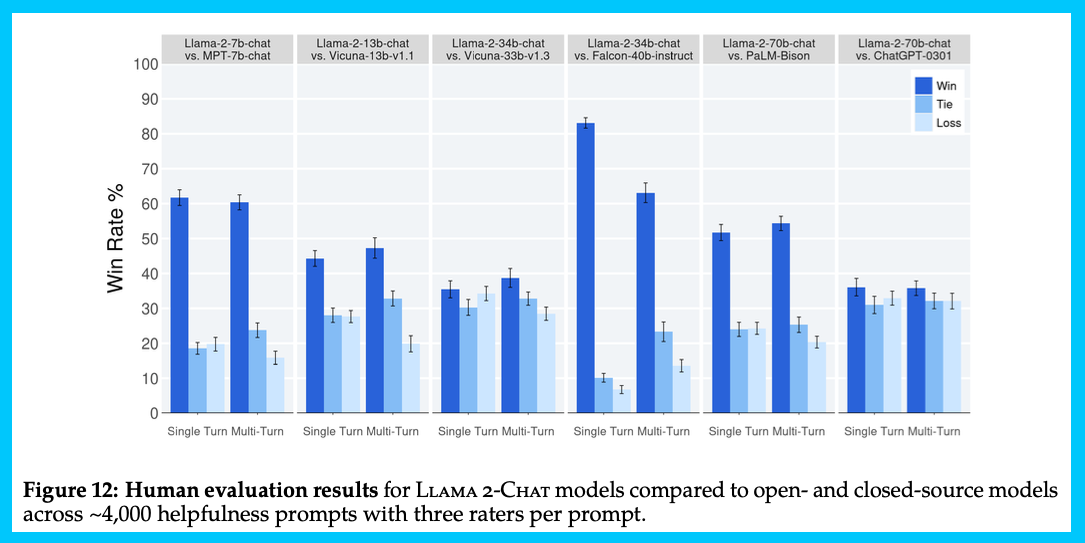

Evaluation and Capabilities: LLAMA 2 undergoes comprehensive evaluation, demonstrating its prowess across various benchmarks. The model excels in automatic benchmarks, surpassing other open-source models. Human evaluations and LLM-as-a-judge evaluations further highlight LLAMA 2's capabilities. However, it is worth noting that evaluating models in terms of safety, bias, and other ethical considerations remains an ongoing challenge.

Safety Considerations: Meta places significant emphasis on safety, dedicating a substantial portion of the paper to safety evaluations. While LLAMA 2 showcases notable progress in ensuring safety, including extensive evaluations and the incorporation of safety metadata, the results are not without limitations. Continued scrutiny and further exploration are necessary to address potential safety concerns effectively.

Licensing: LLAMA 2 is available for commercial use, with specific conditions for products or services catering to a large user base. Meta's licensing terms ensure that LLAMA 2 remains accessible to a wide range of users while also protecting Meta's interests.

LLAMA 2's arrival marks a significant milestone in technical progress. Meta's confirmation of various techniques, previously only rumored to be in use by competitors, demonstrates its commitment to advancing the field of AI. While the social impacts of LLAMA 2 are yet to be fully explored, future predictions and analyses will be shared in upcoming posts.
