History of linguistics

“Those who cannot remember the past are condemned to repeat it.” - George Santayana


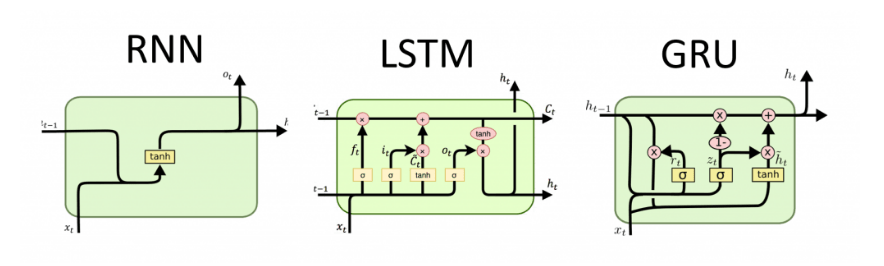

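A Gated Recurrent Unit (GRU) is a type of recurrent neural network unit designed to learn long-term dependencies. It is similar to an LSTM but has no separate memory cell. Its hidden state is controlled by just two gates: an update gate and a reset gate.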
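To make the "no memory cell" point concrete, here is a minimal sketch of a single GRU time step in plain Python. It assumes one common formulation of the gates. Conventions differ between papers and libraries, for example in which term of the final interpolation the update gate weights. The helper names (`sigmoid`, `matvec`, `gru_step`, `zeros`) and the layout of `params` are hypothetical and chosen only for illustration. The hidden state `h` is the only state carried between steps.

```python
import math


def sigmoid(v):
    # Numerically stable logistic function.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def matvec(m, v):
    # Multiply a matrix (list of rows) by a vector (list of floats).
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gru_step(x, h, params):
    """One GRU step. params maps "z", "r", "h" to hypothetical (W, U, b) tuples."""
    Wz, Uz, bz = params["z"]
    Wr, Ur, br = params["r"]
    Wh, Uh, bh = params["h"]
    # Update gate: how much of the candidate state to mix into the new state.
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(Wz, x), matvec(Uz, h), bz)]
    # Reset gate: how much of the previous state feeds into the candidate.
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(Wr, x), matvec(Ur, h), br)]
    rh = [ri * hi for ri, hi in zip(r, h)]
    # Candidate state, built from the input and the reset-scaled previous state.
    h_tilde = [math.tanh(a + b + c) for a, b, c in zip(matvec(Wh, x), matvec(Uh, rh), bh)]
    # New state: interpolate between old state and candidate (no separate cell).
    return [(1 - zi) * hi + zi * ti for zi, hi, ti in zip(z, h, h_tilde)]


def zeros(rows, cols):
    # All-zero matrix, used below only to make the example easy to check by hand.
    return [[0.0] * cols for _ in range(rows)]


# With all-zero weights, both gates equal 0.5 and the candidate is 0,
# so the step simply halves the previous state.
params = {g: (zeros(2, 1), zeros(2, 2), [0.0, 0.0]) for g in "zrh"}
print(gru_step([1.0], [1.0, -1.0], params))  # [0.5, -0.5]
```

Because the new state is a gate-weighted average of the old state and the candidate, a unit can keep information nearly unchanged across many steps when its update gate stays close to 0.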

The long-term dependencies problem is made up of three sub-problems: parsing, learning, and solving.

Something developers are not telling you.

![LSTM Network [RNNs]](/content/images/size/w600/2022/07/Unknown.png)

Long Short Term Memory (LSTM) has been a hot topic in the field of natural language processing (NLP) for several years now.

Exploding and vanishing gradients are two of the most important problems in training deep neural networks, especially recurrent ones. A gradient measures how quickly a function's output changes with respect to each of its inputs.
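Both problems come from the chain rule. Backpropagation through many layers or time steps multiplies one local derivative per step. The sketch below assumes a deliberately simplified toy: a scalar linear recurrence `h_t = w * h_{t-1}` with no nonlinearity, so every local derivative equals `w`. The function name `backprop_factor` is hypothetical. Real networks multiply Jacobian matrices that also include activation derivatives, but the compounding effect is the same.

```python
def backprop_factor(w, steps):
    """Return d h_T / d h_0 for the toy recurrence h_t = w * h_{t-1}.

    Backpropagation multiplies one local derivative per step; here each
    local derivative d h_t / d h_{t-1} is simply w.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule, one factor per time step
    return grad


# Over 50 steps, a factor slightly below 1 makes the gradient vanish,
# and a factor slightly above 1 makes it explode.
for w in (0.5, 1.0, 1.5):
    print(w, backprop_factor(w, 50))
```

With `w = 0.5` the gradient after 50 steps is below 1e-15, so early steps barely influence learning. With `w = 1.5` it exceeds 1e8, which can destabilize training.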

There is a lot of talk about bias in artificial intelligence (AI) models. But what does that mean? And why should we care?

fastText is a library for efficient learning of word representations and sentence vectors. It was developed at Facebook AI Research (FAIR).