SeamlessM4T: Bridging Language Gaps with Multimodal Translation

The Power of Communication in a Multilingual World

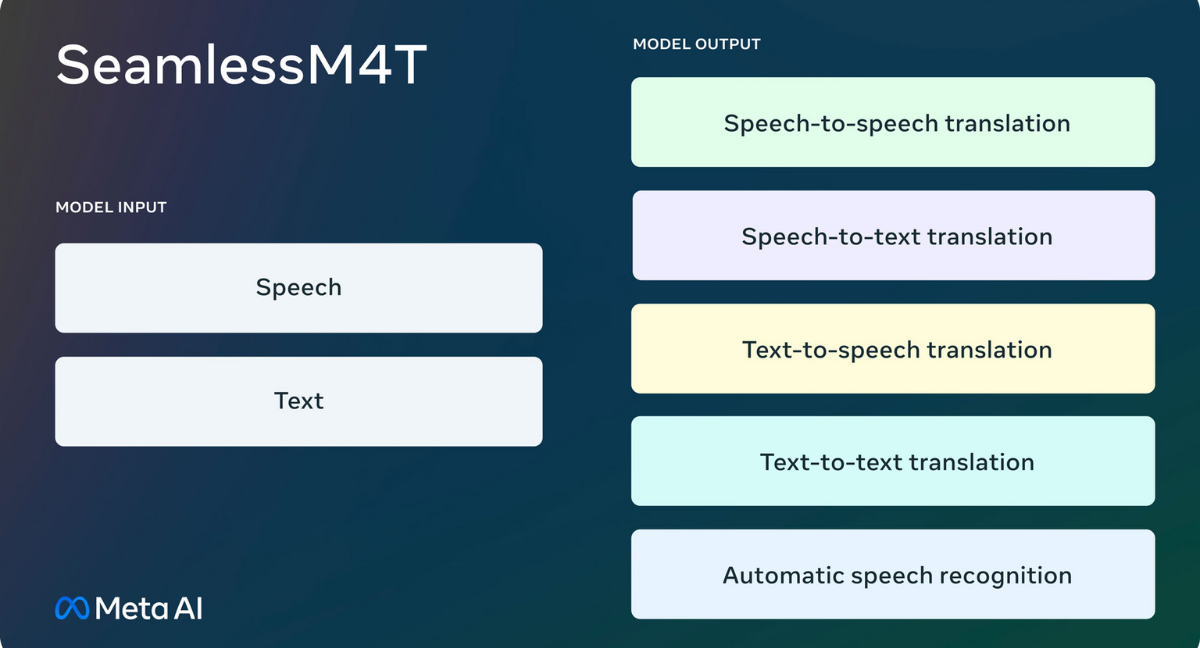

In a remarkable stride towards breaking down language barriers, a multimodal model called SeamlessM4T (Massive Multilingual Multimodal Machine Translation) has emerged on the horizon. This remarkable innovation marks a watershed moment in the world of speech-to-speech and speech-to-text translation and transcription. Released to the public under a CC BY-NC 4.0 license, SeamlessM4T boasts an impressive array of features.

It supports input in nearly 100 languages, encompassing both speech and text and offers output in 100 languages for text and 35 languages (in addition to English) for speech.

SeamlessM4T owes its prowess to a fusion of insights and capabilities drawn from Meta's No Language Left Behind (NLLB), Universal Speech Translator, and Massively Multilingual Speech advances, all encapsulated within a single model.

The Model That Bridges Divides

Traditional translation systems have long grappled with two significant shortcomings: limited language coverage, which forms a barrier to multilingual communication, and a reliance on multiple models that often lead to translation errors, delays, and deployment complexities.

SeamlessM4T sets out to conquer these challenges with its expansive language coverage, remarkable accuracy, and all-in-one model capabilities. These strides herald a new era of effortless communication between individuals from diverse linguistic backgrounds and offer a translation tool that is not only powerful but also user-friendly.

A Revolution in Multilingual Speech Generation

SeamlessM4T stands as the pioneer in direct speech-to-speech translation, many-to-many style. On the input side, it accommodates up to 100 languages, flexibly adapting to the task at hand. Additionally, it possesses the unique ability to identify source languages without relying on a separate language identification model. Moreover, as a unified model, it reduces latency when compared to cascaded systems, ensuring a smoother user experience.

Unmatched Translation Quality

Quality is the hallmark of SeamlessM4T's translation capabilities, achieving a state-of-the-art status for speech translation across various lengths of audio and text. This exceptional performance is underpinned by Fairseq2, a cutting-edge modeling toolkit designed from the ground up with speed and user-friendliness in mind.

The Power of Multimodal Data

SeamlessM4T leverages the vast SeamlessAlign corpus, the largest open dataset for multimodal translation to date, boasting a staggering 470,000 hours of content. This breakthrough in multimodal data mining was achieved through the deployment of SONAR, a state-of-the-art sentence embedding space for both speech and text.

Putting it to the Test

SeamlessM4T has undergone comprehensive evaluation across all supported languages, employing both automatic metrics (ASR-BLEU, BLASER 2) and human evaluation. It was also subjected to rigorous testing for robustness, bias, and toxicity, where it outperformed previous state-of-the-art models by a significant margin.

How SeamlessM4T Operates

SeamlessM4T is a dual-component system comprising encoders and decoders. The text and speech encoders have the capability to recognize speech input in 100 languages, while the decoders are responsible for translating that meaning into nearly 100 languages for text and 35 languages (along with English) for speech.

Deciphering Speech

The unsupervised speech encoder is a marvel in itself, learning to extract structure and meaning from speech by analyzing millions of hours of multilingual speech. It breaks down the audio signal into segments, each representing a selection of sounds that constitute human language, thereby constructing an internal representation of the spoken words. To map these segments to actual words, a length adaptor is employed.

Processing Text

The text encoder, rooted in the NLLB model, is proficient in comprehending text in 100 languages and generating representations that are instrumental for translation tasks.

Generating Text

The decoder is trained to take sequences of written words and speech units and translate them into text. This functionality can be applied to tasks in the same language, such as speech recognition, as well as multilingual translation tasks. Through multitask training, the model leverages the strengths of the robust text-to-text NLLB translation model to guide its speech-to-text translation component via token-level knowledge distillation.

Creating Speech

The model employs acoustic units to represent speech on the target side. The text-to-unit component in the UnitY model generates these discrete speech units based on the text output, having been pre-trained on ASR data prior to UnitY fine-tuning. A multilingual unit HiFi-GAN vocoder then transforms these discrete units into audio waveforms.

SeamlessM4T is a testament to the relentless pursuit of overcoming linguistic barriers, opening doors to seamless communication and understanding in a multilingual world. With its exceptional capabilities, this multimodal translation model is poised to revolutionize the way we interact and collaborate across languages, truly taking us another step forward in removing language barriers.

Read More:

We research, curate, and publish daily updates from the field of AI. A paid subscription gives you access to paid articles, a platform to build your own generative AI tools, invitations to closed events, and open-source tools.

Consider becoming a paying subscriber to get the latest!

No spam, no sharing to third party. Only you and me.