Day 9 - Neural Networks (continued)

On the 20th of December, with Christmas just around the corner, Dr. Silverman and Dr. Ingrid found themselves sipping coffee in the bustling common market of the village. The air was festive, with the scent of pine and gingerbread lingering.

"I've been curious about neural network optimization," Dr. Ingrid began, stirring her coffee. "How do we make these networks more efficient?"

Eva Joins the Conversation

As they delved into the intricacies of optimization, Eva, along with her parents, approached them. "Dr. Silverman, Dr. Ingrid!" she exclaimed. "I just learned about neural networks in school."

"Perfect timing, Eva!" Dr. Silverman smiled. "Let's start with the basics, shall we?"

Perceptron and Layers

"Imagine a neural network as a complex web," Dr. Silverman started. "In its simplest form, we have a perceptron, like a single decision-making neuron. It takes inputs, weighs how important each one is, adds them up, and decides what output to give."
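For readers who like to see the idea in code, here is a minimal Python sketch of a single perceptron. It assumes a simple step activation (output 1 if the weighted sum is positive, otherwise 0); the weights and bias are made-up values chosen so that the perceptron behaves like a logical AND.

```python
def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a step function."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Step activation: "fire" (1) if the total is positive, otherwise 0
    return 1 if total > 0 else 0

# Illustrative weights that make the perceptron act like a logical AND
weights = [1.0, 1.0]
bias = -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron([a, b], weights, bias))
```

Only the input pair (1, 1) pushes the weighted sum above zero, so it is the only one that produces a 1.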

"And layers?" Eva asked.

"Layers are like different levels in a game," Dr. Ingrid added. "Each layer has multiple perceptrons, and deeper layers can combine the simple patterns found by earlier layers into more complex ones. The first layer takes the initial input, and the last layer gives the final output."

Hidden Layers

"What about hidden layers?" Eva questioned.

"Hidden layers are the levels between the input and output," Dr. Silverman explained. "They're where most of the processing happens. Think of them as the kitchen in a restaurant, where the raw ingredients (input) are turned into dishes (output)."
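The input, hidden, and output layers can be sketched in a few lines of Python. This is a hypothetical network with 2 inputs, one hidden layer of 3 neurons, and 1 output neuron; the weights are made-up numbers and the sigmoid activation is an assumption, since the story does not name one.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes every input, computes a weighted sum plus
    its bias, and applies the sigmoid activation."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[1.0, -1.5, 0.7]]
output_biases = [0.2]

raw_input = [1.0, 2.0]                                          # the "raw ingredients"
hidden = dense_layer(raw_input, hidden_weights, hidden_biases)  # the "kitchen"
output = dense_layer(hidden, output_weights, output_biases)     # the finished "dish"
print(len(hidden), output)
```

The hidden layer's three values are never shown to the user directly; they only feed the output neuron, which is why the layer is called "hidden".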

One-Hot Encoding

"And one-hot encoding?" Eva continued.

Dr. Ingrid smiled. "It's a way to represent words or categories as vectors. Imagine pointing to exactly one dish on the menu: a '1' for the dish you picked and a '0' for every other dish."
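A minimal Python sketch of one-hot encoding, assuming a small illustrative "menu" of categories. Each category becomes a vector as long as the menu, with a single 1 at that category's position.

```python
def one_hot(category, categories):
    """Return a vector with a 1 at the category's position and 0 everywhere else."""
    vector = [0] * len(categories)
    vector[categories.index(category)] = 1
    return vector

menu = ["soup", "pasta", "salad", "cake"]  # illustrative categories
print(one_hot("salad", menu))  # [0, 0, 1, 0]
```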

Neural Network Optimization - Advanced Discussion with Dr. Ingrid

Optimization Techniques

"Optimization is about making these networks learn better and faster," Dr. Silverman said, turning to Dr. Ingrid. "It involves techniques like gradient descent, where we repeatedly adjust the weights in the network by small steps in the direction that reduces the prediction error."
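To make gradient descent concrete, here is a minimal Python sketch under simplifying assumptions: the "network" is a single weight `w` that predicts `w * x`, the data is made up to follow y = 2x, and the error is measured as mean squared error. Each step moves `w` against the gradient of that error.

```python
# Hypothetical data generated by y = 2 * x; the "network" is a single weight w
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def mse_and_gradient(w):
    """Mean squared error of the prediction w * x and its derivative with respect to w."""
    n = len(xs)
    errors = [w * x - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errors) / n
    grad = sum(2 * e * x for e, x in zip(errors, xs)) / n
    return loss, grad

w = 0.0              # start from an arbitrary guess
learning_rate = 0.01
for step in range(200):
    loss, grad = mse_and_gradient(w)
    w -= learning_rate * grad  # step downhill, against the gradient
print(round(w, 3))
```

After enough steps, `w` settles at the value that makes the error smallest, which for this data is 2.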

Overfitting and Regularization

Dr. Ingrid nodded. "And avoiding overfitting?"

"Exactly," Dr. Silverman replied. "Overfitting is like memorizing answers without understanding. Regularization is a technique to prevent this, for example by penalizing overly large weights, so that the network learns the concept, not just the examples."
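The story does not say which regularization technique is meant. The sketch below uses L2 regularization (also called weight decay), one common choice, on the same made-up single-weight model: a penalty `l2_strength * w**2` is added to the error, which pulls the weight toward zero. This toy dataset is far too small to actually overfit; it only shows the mechanism.

```python
# Hypothetical data generated by y = 2 * x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def train(l2_strength, learning_rate=0.01, steps=500):
    """Gradient descent on mean squared error plus an L2 penalty l2_strength * w**2."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x - y for x, y in zip(xs, ys)]
        grad = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad += 2 * l2_strength * w  # derivative of the penalty term
        w -= learning_rate * grad
    return w

print(round(train(0.0), 3))  # no penalty: the weight fits the data exactly
print(round(train(1.0), 3))  # with a penalty: the weight is pulled toward zero
```

The larger `l2_strength` is, the more the network trades a perfect fit for smaller, simpler weights.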

Learning Rate and Batch Size

"And the learning rate?" Dr. Ingrid inquired.

"It's the step size the network takes while learning," Dr. Silverman answered. "Too big, and it might overshoot the optimal solution or never settle down. Too small, and learning is slow."
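A small Python experiment shows the trade-off. It assumes a toy error curve f(w) = (w - 3)², whose lowest point is at w = 3, and runs the same 20 gradient descent steps with three illustrative learning rates.

```python
def descend(learning_rate, steps=20, start=0.0):
    """Minimise f(w) = (w - 3)**2, whose lowest point is at w = 3."""
    w = start
    for _ in range(steps):
        gradient = 2 * (w - 3)
        w -= learning_rate * gradient
    return w

for lr in (0.01, 0.4, 1.1):
    print(lr, round(descend(lr), 4))
```

With 0.01 the weight is still far from 3 after 20 steps (too slow). With 0.4 it lands almost exactly on 3. With 1.1 every step overshoots further than the last, so the weight swings further and further away from the minimum.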

"And batch size?"

"It's the number of examples the network sees before updating its weights. Like tasting a few dishes before deciding to change the recipe," Dr. Silverman concluded.
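A minimal Python sketch of mini-batch training, reusing a made-up single-weight model on data following y = 2x. The helper names `make_batches` and `train_one_epoch` are hypothetical. One pass over the data (an "epoch") performs one weight update per batch, so the batch size decides how often the "recipe" changes.

```python
import random

def make_batches(data, batch_size, seed=0):
    """Shuffle the examples and split them into groups of batch_size (the last may be smaller)."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i:i + batch_size] for i in range(0, len(shuffled), batch_size)]

def train_one_epoch(data, batch_size, w=0.0, learning_rate=0.1):
    """One pass over the data; the weight is updated once per batch."""
    updates = 0
    for batch in make_batches(data, batch_size):
        # Average gradient of the squared error over this batch only
        grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
        w -= learning_rate * grad
        updates += 1
    return w, updates

# Hypothetical data following y = 2 * x
data = [(x / 10, 2.0 * x / 10) for x in range(1, 11)]
for batch_size in (1, 4, 10):
    w, updates = train_one_epoch(data, batch_size)
    print(f"batch size {batch_size}: {updates} weight updates, w = {w:.3f}")
```

Small batches mean many quick, noisier updates; large batches mean fewer updates, each based on more examples.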

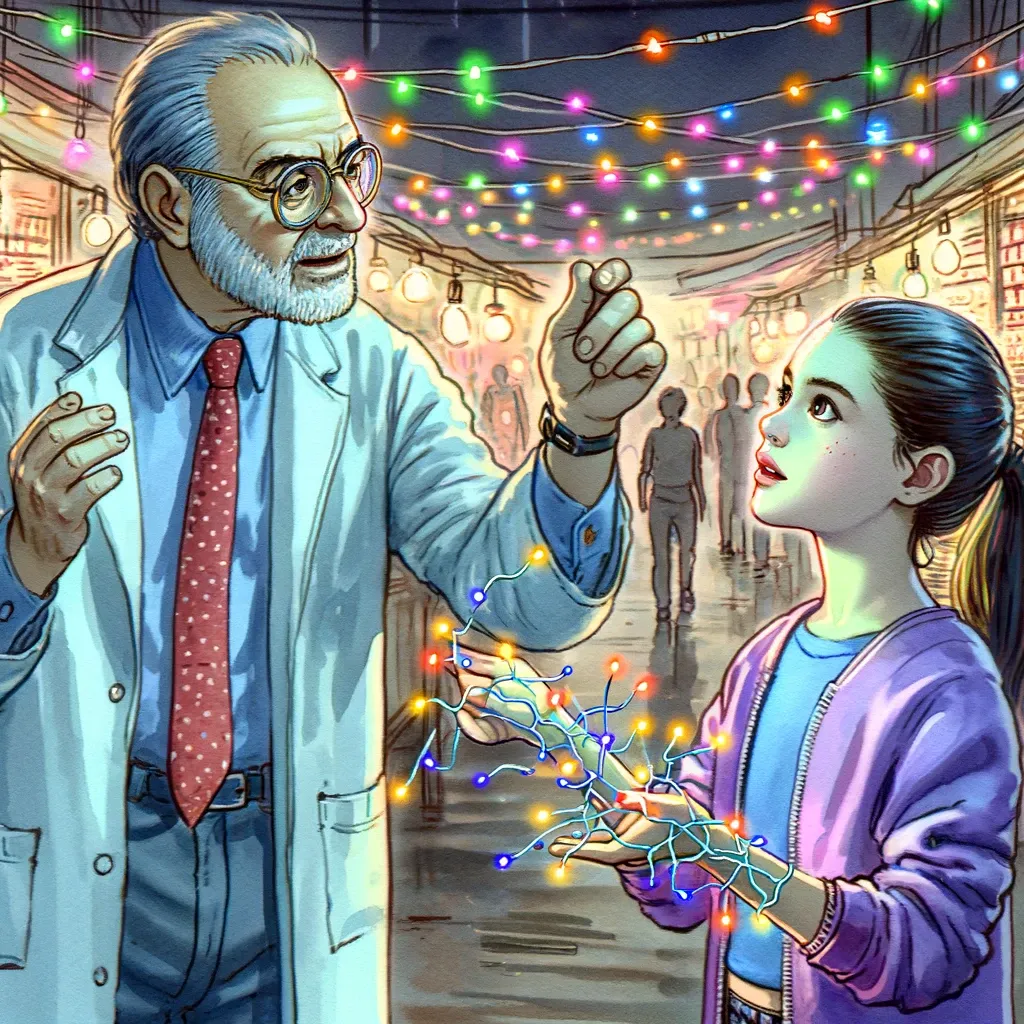

A Fusion of Learning

As they talked, the market buzzed around them. Eva listened, fascinated, while Dr. Silverman and Dr. Ingrid explored the deeper aspects of neural networks. The conversation was a fusion of basic learning and advanced understanding, reflecting the diverse beauty of artificial intelligence.

As the evening settled in, the lights of the market glowed warmly. They parted ways, each lost in thought about the wonders of neural networks. Eva was excited about her school project, Dr. Ingrid pondered optimization strategies, and Dr. Silverman smiled, pleased with the fruitful exchange of knowledge.

Their discussion had not only been a celebration of learning but also a perfect prelude to the joy and anticipation of the upcoming Christmas.

Enjoyed unraveling the mysteries of AI with Everyday Stories? Share this gem with friends and family who'd love a jargon-free journey into the world of artificial intelligence!