InstructPix2Pix

Brooks, T., Holynski, A. and Efros, A.A., 2022. InstructPix2Pix: Learning to follow image editing instructions. arXiv preprint arXiv:2211.09800.

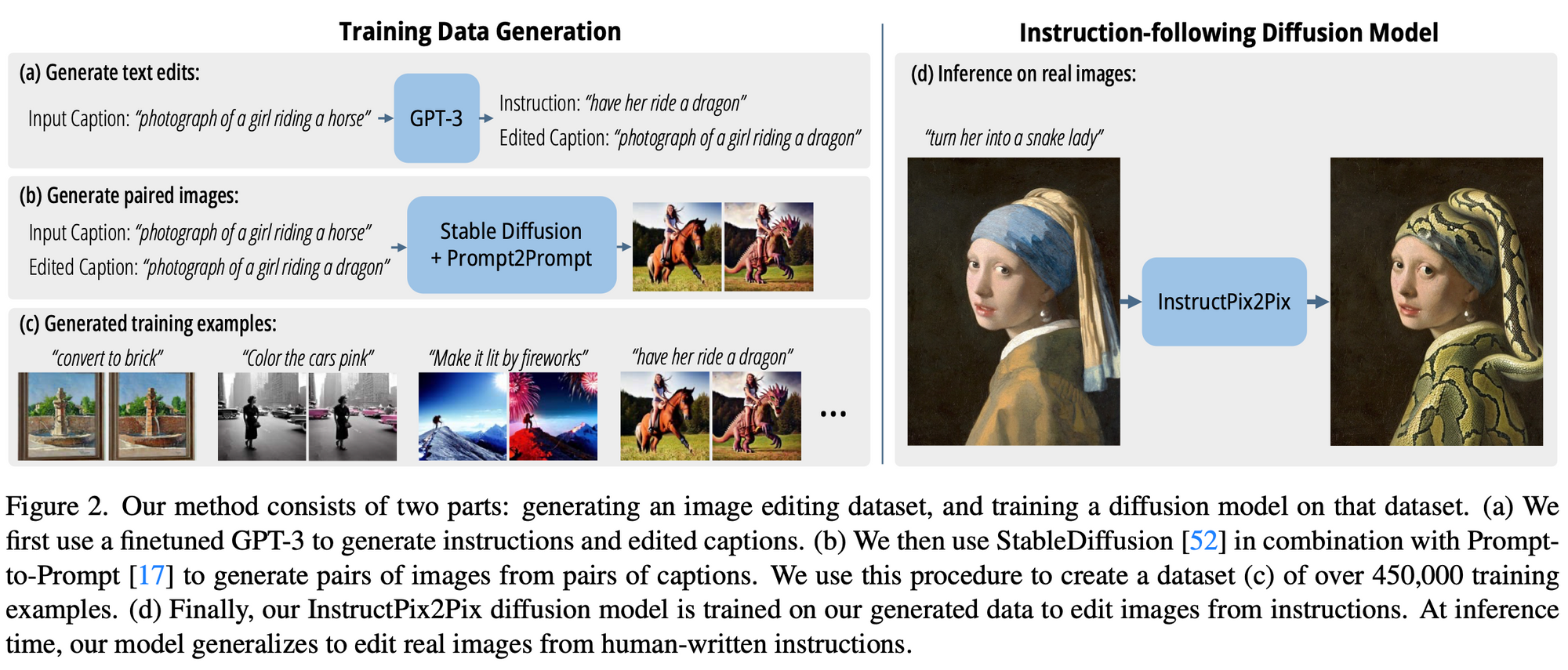

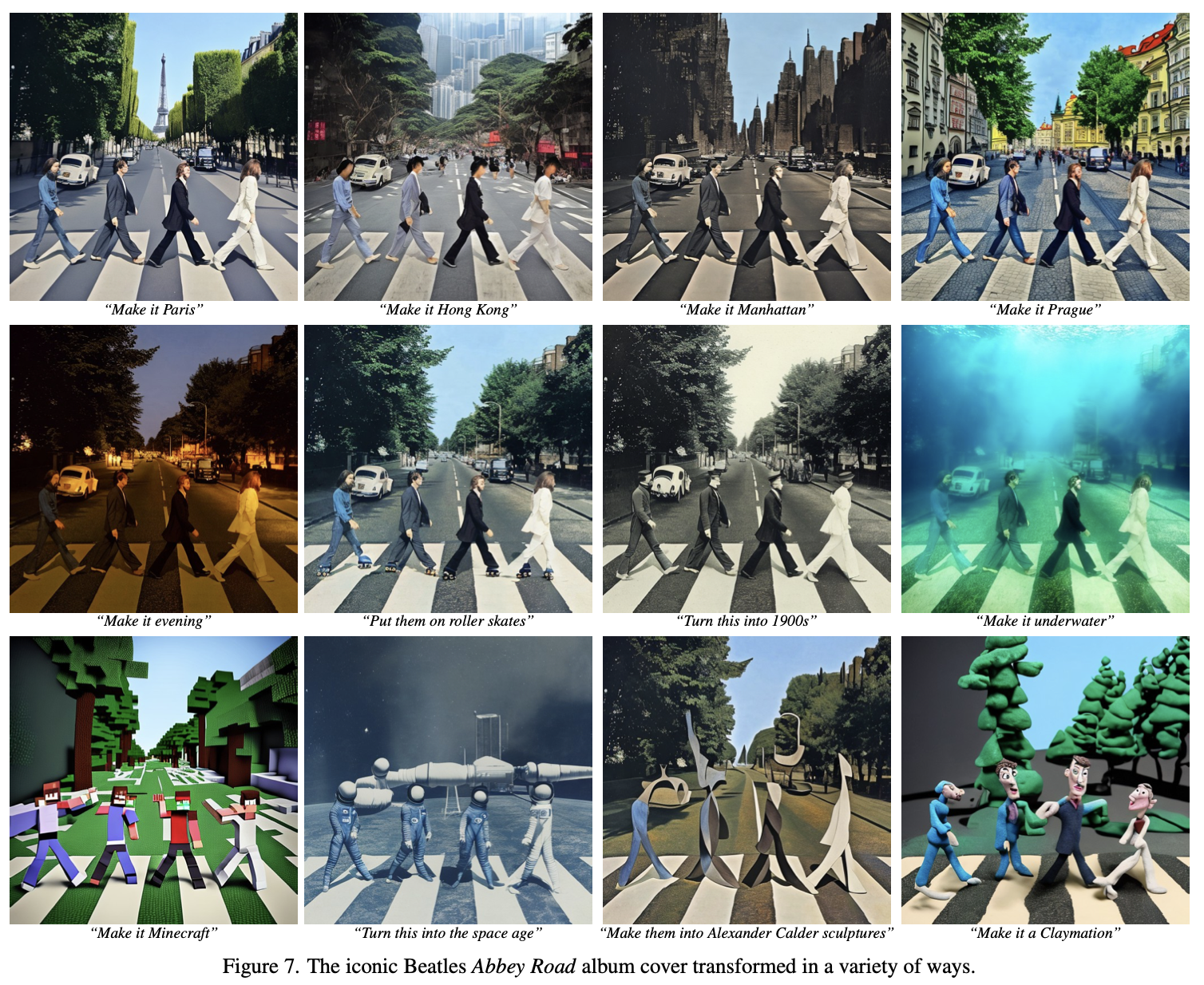

The authors propose a method for editing images from written instructions. They generate a dataset of image editing examples by combining the knowledge of a language model (GPT-3) and a text-to-image model (Stable Diffusion). They train a model, InstructPix2Pix, on this generated data and show that it generalizes to real images and user-written instructions at inference time. Editing takes only a few seconds, and the model requires neither per-example fine-tuning nor inversion. The authors demonstrate successful edits across a range of input images and instructions.

The authors introduce a method for teaching a generative model to follow human-written image editing instructions. To build a large dataset for this task, they combine the knowledge of a language model (GPT-3) and a text-to-image model (Stable Diffusion) to create paired training data. The resulting model is a conditional diffusion model that takes an input image and a text instruction and generates the edited image.
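The data generation idea can be sketched in a few lines. This is a toy illustration only, not the authors' code: `toy_language_model` and `toy_text_to_image` are hypothetical stand-ins for GPT-3 and Stable Diffusion, images are represented as strings, and reusing one seed for both images in a pair is an assumption made here to keep the pair related.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class EditExample:
    """One paired training example: image before, instruction, image after."""
    input_image: str    # placeholder string standing in for pixel data
    instruction: str
    edited_image: str   # placeholder string standing in for pixel data


def toy_language_model(caption: str) -> Tuple[str, str]:
    """Hypothetical stand-in for the language model.

    Given a caption, returns an edit instruction and the caption after the edit.
    """
    instruction = "make it winter"
    edited_caption = caption + " in winter"
    return instruction, edited_caption


def toy_text_to_image(caption: str, seed: int) -> str:
    """Hypothetical stand-in for the text-to-image model (returns a tag, not pixels)."""
    return f"<image seed={seed}: {caption}>"


def build_dataset(
    captions: List[str],
    language_model: Callable[[str], Tuple[str, str]] = toy_language_model,
    text_to_image: Callable[[str, int], str] = toy_text_to_image,
    base_seed: int = 0,
) -> List[EditExample]:
    """Turn plain captions into (input image, instruction, edited image) triples."""
    examples = []
    for i, caption in enumerate(captions):
        # Step 1: the language model proposes an edit and the resulting caption.
        instruction, edited_caption = language_model(caption)
        # Step 2: the image model renders both captions (same seed: an assumption).
        seed = base_seed + i
        before = text_to_image(caption, seed)
        after = text_to_image(edited_caption, seed)
        examples.append(EditExample(before, instruction, after))
    return examples


dataset = build_dataset(["a photo of a cabin in the woods"])
print(dataset[0])
```

Only the triples are kept for training, so the editing model never sees the captions themselves.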

The model performs the edit directly in the forward pass and does not require additional example images, full descriptions of the input or output images, or per-example fine-tuning.
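In practice, a single edit might look like the sketch below. It assumes the Hugging Face `diffusers` library and its `StableDiffusionInstructPix2PixPipeline`, together with the `timbrooks/instruct-pix2pix` checkpoint; none of these are named in the summary above. The `EditRequest` class, the file names, and the step count are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class EditRequest:
    """Everything the model needs for one edit: an image and an instruction."""
    image_path: str                 # hypothetical local file
    instruction: str
    num_inference_steps: int = 20   # illustrative value, not a recommendation


def edit_image(request: EditRequest, model_id: str = "timbrooks/instruct-pix2pix"):
    """Run one instruction-based edit and return the edited PIL image."""
    # Heavy dependencies are imported here so the module loads without them.
    import torch
    from diffusers import StableDiffusionInstructPix2PixPipeline
    from PIL import Image

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32

    # Load the pretrained weights once; no per-image fine-tuning happens here.
    pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(
        model_id, torch_dtype=dtype
    ).to(device)

    image = Image.open(request.image_path).convert("RGB")
    result = pipe(
        request.instruction,
        image=image,
        num_inference_steps=request.num_inference_steps,
    )
    return result.images[0]


request = EditRequest("input.jpg", "make it look like a watercolor painting")
# edited = edit_image(request)   # downloads the checkpoint on first use
# edited.save("edited.jpg")
```

The call takes only the image and the instruction, which mirrors the claim that no extra example images or image descriptions are needed.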

The authors demonstrate that the model generalizes to real images and natural human-written instructions, even though it was trained entirely on synthetic data. It supports a wide variety of edits, including replacing objects and changing an image's style, setting, or artistic medium.

