GPT to GPT-4: All you wanted to know about them

The development of Generative Pre-trained Transformer (GPT) language models by OpenAI has reshaped the field of natural language processing (NLP). As the models evolved from GPT to GPT-4, their complexity and capabilities increased significantly. In this post, we take a close look at the parameters of the GPT series, examining how these language models have grown and what that growth implies.

Topics covered:

- The Transformer Architecture

- GPT: The Beginning

- GPT-2: Doubling Down on Parameters

- GPT-3: The Parameter Giant

- GPT-4: The Mysterious Future

- ChatGPT: Conversational AI at its Best

- GPT-4 Implications: Opportunities and Challenges

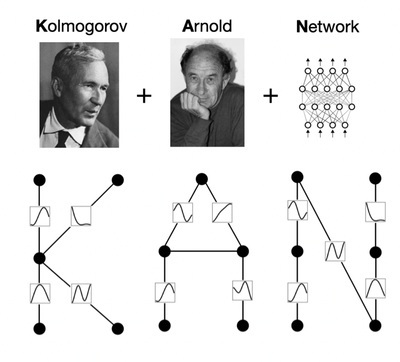

The Transformer Architecture

The GPT series is built on the Transformer architecture, which has become the go-to model for NLP tasks. Transformer models consist of stacked layers, each combining a self-attention block with a feed-forward block, and each layer has its own set of parameters. The number of parameters in a Transformer model strongly influences its performance and is determined by a handful of architectural factors, chiefly the number of layers and the width (hidden dimension) of each layer.
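As a rough illustration of how such a count comes together, here is a minimal sketch in Python. It assumes a GPT-2-style decoder-only layout: learned token and position embeddings, a feed-forward width of four times the hidden size, bias terms on every linear layer, two layer norms per block plus a final one, and an output layer that shares weights with the token embedding. The function name `count_decoder_params` and its arguments are hypothetical, chosen for this example only; other models in the series may differ in these details.

```python
def count_decoder_params(n_layers, d_model, vocab_size, n_ctx, ffn_mult=4):
    """Approximate parameter count for a GPT-2-style decoder-only Transformer.

    Assumptions (not taken from any official specification): biases on all
    linear layers, two layer norms per block, one final layer norm, and an
    output projection tied to the token embedding (so it adds no parameters).
    """
    d_ff = ffn_mult * d_model

    # Self-attention: query, key, value and output projections (weights + biases).
    attention = 4 * d_model * d_model + 4 * d_model

    # Feed-forward: expand to d_ff, then project back to d_model (weights + biases).
    feed_forward = 2 * d_model * d_ff + d_ff + d_model

    # Two layer norms per block, each with a scale and a shift vector.
    layer_norms = 2 * 2 * d_model

    per_layer = attention + feed_forward + layer_norms

    # Token embedding table plus learned position embeddings.
    embeddings = vocab_size * d_model + n_ctx * d_model

    # Final layer norm applied after the last block.
    final_norm = 2 * d_model

    return embeddings + n_layers * per_layer + final_norm


if __name__ == "__main__":
    # Configuration commonly reported for the smallest GPT-2 model.
    total = count_decoder_params(n_layers=12, d_model=768,
                                 vocab_size=50257, n_ctx=1024)
    print(f"{total:,} parameters")
```

With the configuration commonly reported for the smallest GPT-2 model (12 layers, hidden size 768, vocabulary of 50,257 tokens, context length 1,024), the sketch comes to roughly 124 million parameters. Most of each layer's total comes from the terms that scale with the square of the hidden size, which is why widening a model increases its parameter count so quickly.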
