Foundation Models and the EU AI Act

Assessing Compliance and Recommending Transparency

Foundation models have reshaped artificial intelligence and, with it, many parts of society. Models such as OpenAI's ChatGPT can understand and generate human-like text. Their deployment has brought challenges, though: they carry serious risks, have been rolled out rapidly, and have drawn both unprecedented adoption and ongoing controversy.

Amidst this transformative landscape, the European Union (EU) is taking a significant step forward by finalizing its AI Act, which will be the world's first comprehensive regulation governing AI.

Just yesterday, the European Parliament adopted a draft of the Act by an overwhelming majority: 499 votes in favor, 28 against, and 93 abstentions. Notably, the Act includes explicit obligations for foundation model providers, including major players like OpenAI and Google.

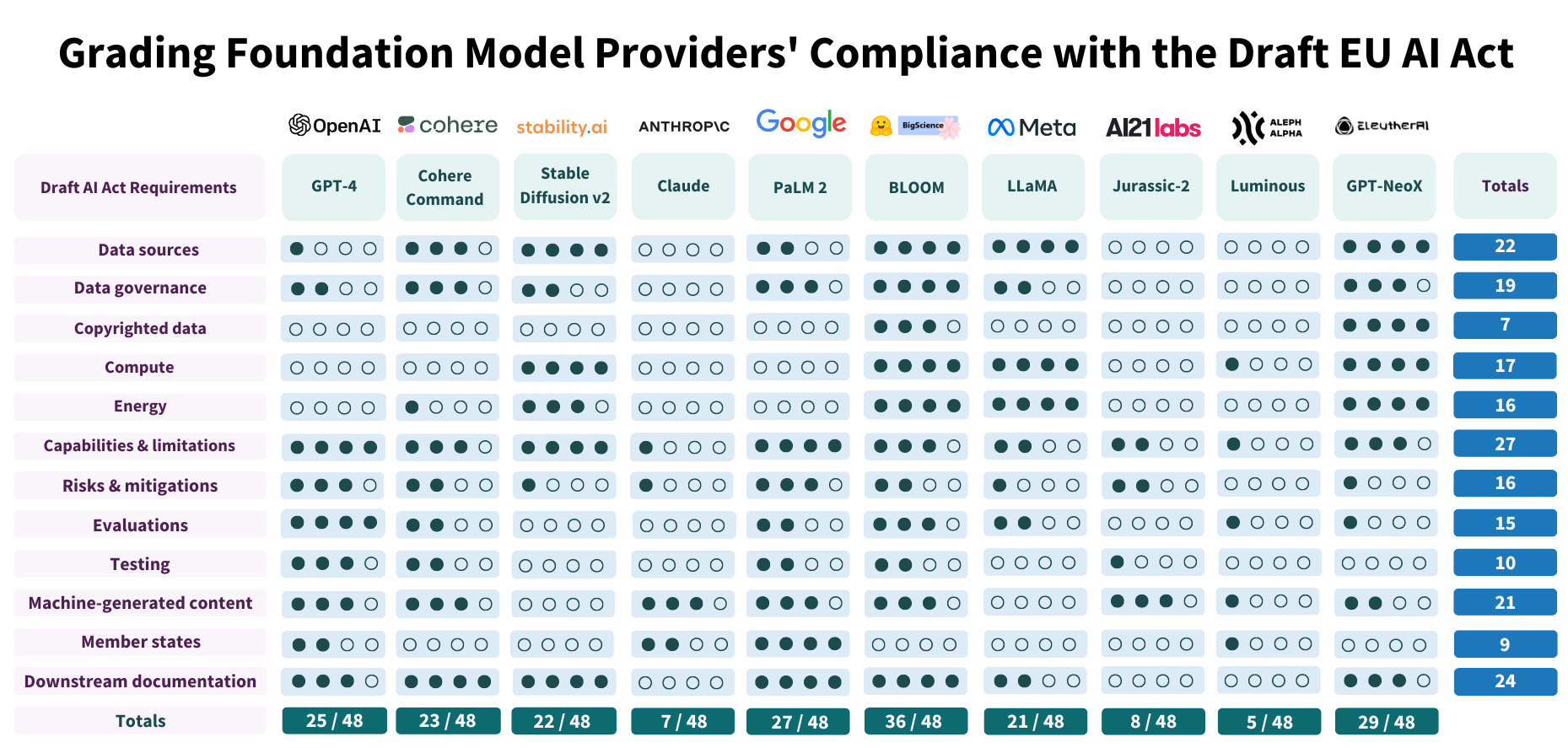

In this blog post, we delve into the compliance of major foundation model providers with the draft requirements of the EU AI Act. The evaluation reveals that these providers largely fall short of meeting these requirements. They often fail to disclose sufficient information about the data, computing infrastructure, and deployment aspects of their models. Key characteristics of the models themselves also remain undisclosed.

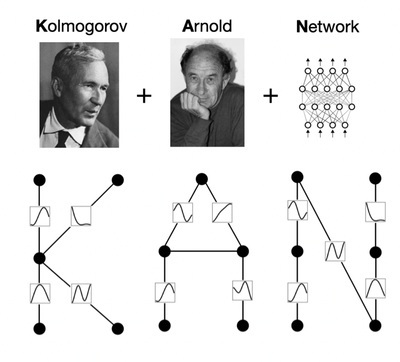

Figure 1. Assessment of 10 major foundation model providers (and their flagship models) against the 12 AI Act requirements, each graded on a scale from 0 (worst) to 4 (best). The best possible total score is therefore 48.

Image credit: Stanford University. Stanford researchers evaluate foundation model providers like OpenAI and Google for their compliance with the proposed EU law on AI.

To be specific, the providers do not adequately address the use of copyrighted training data, the hardware used and emissions produced during training, and the evaluation and testing processes.

As a result, we emphasize the need for policymakers to prioritize transparency, guided by the requirements set forth in the AI Act. By enhancing transparency, the entire ecosystem can benefit from improved accountability.
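The scoring scheme behind Figure 1 is simple arithmetic: each of the 12 requirements is graded from 0 to 4, and the grades are summed into a total out of 48. The sketch below shows one way to compute such a total in Python. The function name and the sample grades are hypothetical placeholders for illustration; they are not scores from the Stanford assessment.

```python
NUM_REQUIREMENTS = 12     # requirements assessed in Figure 1
MAX_PER_REQUIREMENT = 4   # each requirement is graded 0 (worst) to 4 (best)
MAX_TOTAL = NUM_REQUIREMENTS * MAX_PER_REQUIREMENT  # best possible total


def total_score(grades):
    """Sum per-requirement grades after checking their count and range."""
    if len(grades) != NUM_REQUIREMENTS:
        raise ValueError(f"expected {NUM_REQUIREMENTS} grades, got {len(grades)}")
    for grade in grades:
        if not 0 <= grade <= MAX_PER_REQUIREMENT:
            raise ValueError(f"grade {grade} is outside 0..{MAX_PER_REQUIREMENT}")
    return sum(grades)


# Hypothetical grades for an imaginary provider (not real assessment data).
example_grades = [4, 3, 0, 2, 1, 4, 0, 3, 2, 1, 4, 2]
score = total_score(example_grades)
print(f"{score}/{MAX_TOTAL} ({score / MAX_TOTAL:.0%} of the maximum)")
```

Reporting the total alongside the maximum makes it easier to compare providers at a glance, although the per-requirement grades are what show where a provider falls short.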

Motivation: Foundation Models and the EU AI Act

Foundation models have become central to the global conversation on AI, with far-reaching impacts on the economy, policy, and society. Concurrently, the EU AI Act represents a crucial regulatory initiative in the field of AI worldwide.

Once enacted and enforced, the Act will not only set requirements for AI across the EU, home to 450 million people, but also set a precedent for AI regulation globally, a phenomenon known as the "Brussels effect."

Policymakers worldwide are already drawing inspiration from the AI Act, and multinational companies may adjust their global practices to conform to a unified AI development process. Therefore, how we regulate foundation models will shape the broader digital supply chain and influence the societal impact of this technology.

The assessment aims to establish the facts about the current conduct of foundation model providers and drive future intervention.

Status Quo: Evaluating Compliance and Anticipating Change

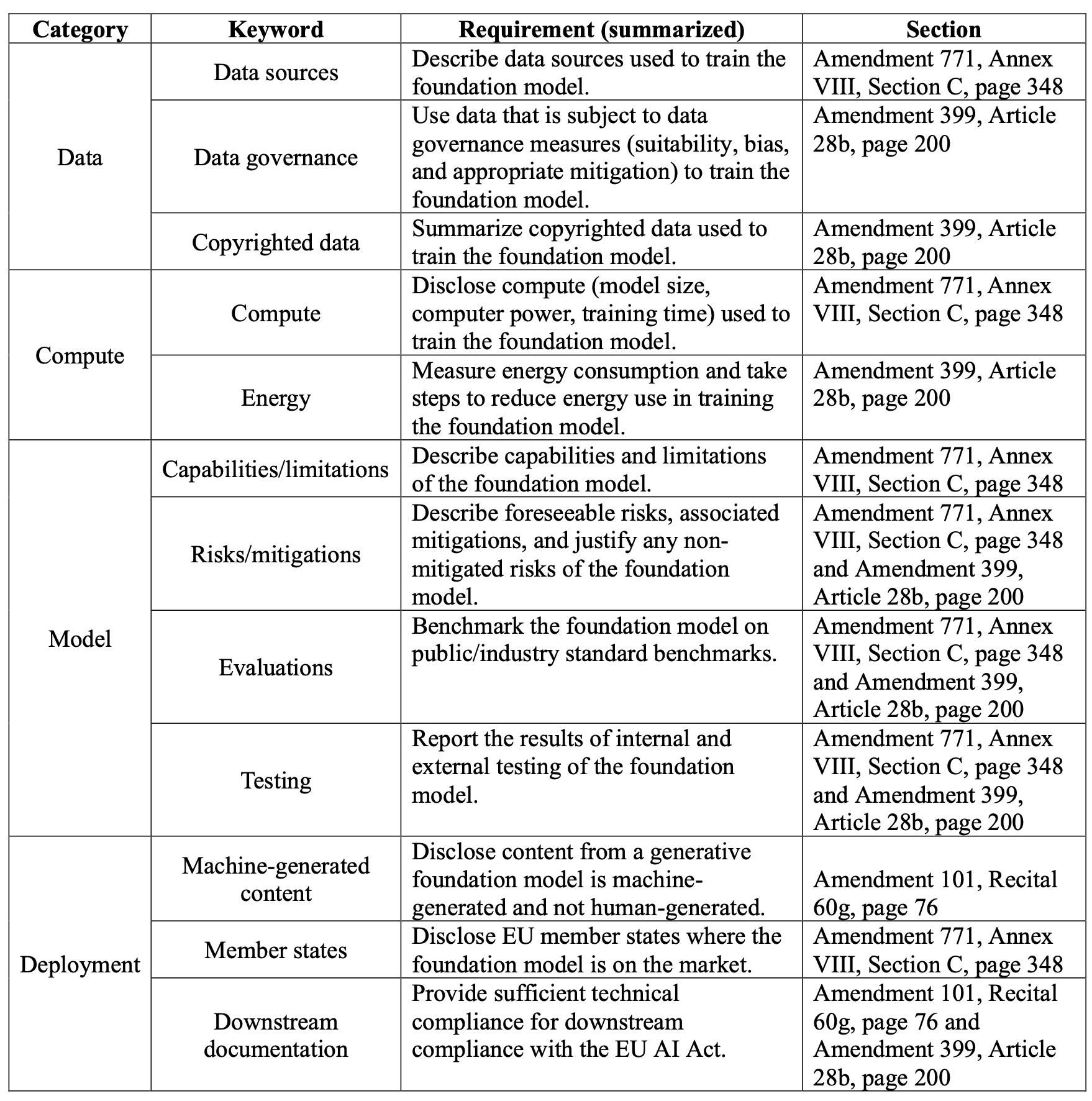

Table 1. We identify, categorize, summarize, and source requirements from the draft AI Act adopted by EU Parliament. (Source: Stanford University Report)

In this evaluation, the conduct of foundation model providers is closely examined, and their compliance with the draft requirements of the EU AI Act is assessed. The focus is on the areas where providers currently fall short, providing insights into how the AI Act, if enacted, obeyed, and enforced, will transform the status quo. Among the requirements that providers struggle to fulfill are:

Addressing Copyright Issues:

One persistent challenge in the foundation model ecosystem is unclear liability around copyright. Many foundation model providers do not disclose the copyright status of their training data. It also remains unclear whether training on copyrighted data falls under fair use and what the implications are when a model reproduces that data.

To ensure compliance with the EU AI Act, legislators, regulators, and courts need to provide clear guidelines on copyright in relation to the training procedure and the output of generative models. This includes defining conditions under which copyright or licenses must be respected during training and measures that model providers should take to reduce the risk of copyright infringement.

Improving Reporting of Compute/Energy Usage:

Foundation model providers inconsistently report energy usage, emissions, and their strategies for measuring and mitigating emissions. The measurement of the energy required to train foundation models is still a topic of debate. However, regardless of the method used, the reporting of these costs needs improvement.

Efforts should be made to standardize reporting practices and develop tools that facilitate accurate measurement and disclosure of energy consumption. Policymakers can play a crucial role in encouraging and incentivizing foundation model providers to transparently report their compute and energy usage.
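To make the reporting problem concrete, here is a minimal sketch of one common back-of-the-envelope estimate: energy is the number of accelerators times their average power draw times training hours, scaled by the data center's power usage effectiveness (PUE), and emissions are that energy times the grid's carbon intensity. This is only one possible method, and as noted above, measurement is still debated. The class name, field names, and every number below are hypothetical and do not describe any real model.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """Inputs a provider would need to disclose for a rough estimate."""
    num_accelerators: int          # GPUs/TPUs used for training
    avg_power_watts: float         # average draw per accelerator
    hours: float                   # wall-clock training time
    pue: float                     # data center power usage effectiveness
    grid_kg_co2e_per_kwh: float    # carbon intensity of the local grid

    def energy_kwh(self) -> float:
        """Estimated energy, including data center overhead via PUE."""
        kilowatts = self.num_accelerators * self.avg_power_watts / 1000
        return kilowatts * self.hours * self.pue

    def emissions_kg_co2e(self) -> float:
        """Estimated emissions from the energy estimate and grid intensity."""
        return self.energy_kwh() * self.grid_kg_co2e_per_kwh


# Hypothetical values chosen only to illustrate the arithmetic.
run = TrainingRun(
    num_accelerators=100,
    avg_power_watts=300.0,
    hours=240.0,
    pue=1.2,
    grid_kg_co2e_per_kwh=0.4,
)
print(f"energy: {run.energy_kwh():.0f} kWh")
print(f"emissions: {run.emissions_kg_co2e():.0f} kg CO2e")
```

Even an estimate this simple depends on five disclosed inputs, which is why inconsistent reporting makes results hard to compare across providers.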

Enhancing Risk Mitigation Disclosure:

The risk landscape associated with foundation models is vast and includes potential malicious use, unintentional harm, and structural or systemic risks. While many foundation model providers mention these risks, they often fail to disclose the mitigations they have implemented and their efficacy.

The EU AI Act requires providers to describe both mitigated and non-mitigated risks, including explanations for risks that cannot be mitigated. Currently, none of the assessed providers fully complies with this requirement.

To improve accountability and transparency, foundation model providers should provide detailed information about the specific measures they have taken to mitigate risks and their effectiveness.

Establishing Evaluation Standards and Auditing Ecosystem:

Foundation model providers rarely measure models' performance in terms of intentional harms, robustness, calibration, and other important factors. While there is a growing call for more evaluations, especially beyond language models, evaluation standards for foundation models are still a work in progress.

The EU AI Act emphasizes the need for performance disclosure, and policymakers should collaborate with organizations like the National Institute of Standards and Technology (NIST) to establish evaluation standards and create AI testbeds. These efforts will help ensure consistent and reliable evaluation of foundation models' performance and facilitate informed decision-making.

Open vs. Restricted/Closed Models: The Compliance Dichotomy

Another important aspect highlighted in the assessment is the compliance dichotomy based on the release strategy of foundation model providers.

The assessment distinguishes between broadly open releases (e.g., EleutherAI's GPT-NeoX, Hugging Face/BigScience's BLOOM, Meta's LLaMA) and restricted/closed releases (e.g., Google's PaLM 2, OpenAI's GPT-4, Anthropic's Claude).

Open releases generally score well on resource disclosure requirements, while restricted/closed releases tend to perform better on deployment-related requirements. This dichotomy suggests that finding the right balance between openness and control is crucial for compliance and accountability.

Feasibility of Compliance and the Need for Improvement

The assessment concludes that no foundation model provider currently achieves a perfect score, indicating room for improvement across the board. However, the study also highlights that it is feasible for organizations to fully comply with the requirements of the EU AI Act.

Although compliance may require changes in conduct and practices, the report suggests that many providers could reach high compliance scores through meaningful and plausible adjustments.

It emphasizes that transparency and disclosure of information about data, compute, limitations, risks, and evaluations are the key areas that need improvement.

By addressing these challenges and enhancing compliance with the EU AI Act, foundation model providers can ensure responsible and accountable use of AI technology.

To achieve these improvements, collaboration between policymakers, regulators, researchers, and foundation model providers is essential. Policymakers should work towards establishing clear guidelines on copyright, incentivizing transparent reporting of compute and energy usage, and defining evaluation standards for foundation models.

Regulators can play a crucial role in enforcing compliance with the EU AI Act and conducting audits to ensure adherence to the requirements. Researchers and foundation model providers should actively engage in addressing the identified challenges, disclosing mitigation strategies for risks, and improving evaluation methodologies.

In addition, industry-wide initiatives and collaborations can drive progress in the right direction. Organizations like the Partnership on AI, OpenAI, and other relevant stakeholders can come together to develop best practices, share knowledge, and establish industry standards for responsible foundation model development and deployment. These collaborative efforts can help foster innovation while ensuring the ethical and accountable use of AI technology.

Overall, the assessment highlights the need for continuous improvement and emphasizes the importance of transparency, accountability, and compliance in the development and deployment of foundation models. By addressing the persistent challenges and striving for higher standards, the AI community can maximize the benefits of foundation models while minimizing potential risks and ensuring the long-term trust and acceptance of AI technology.
