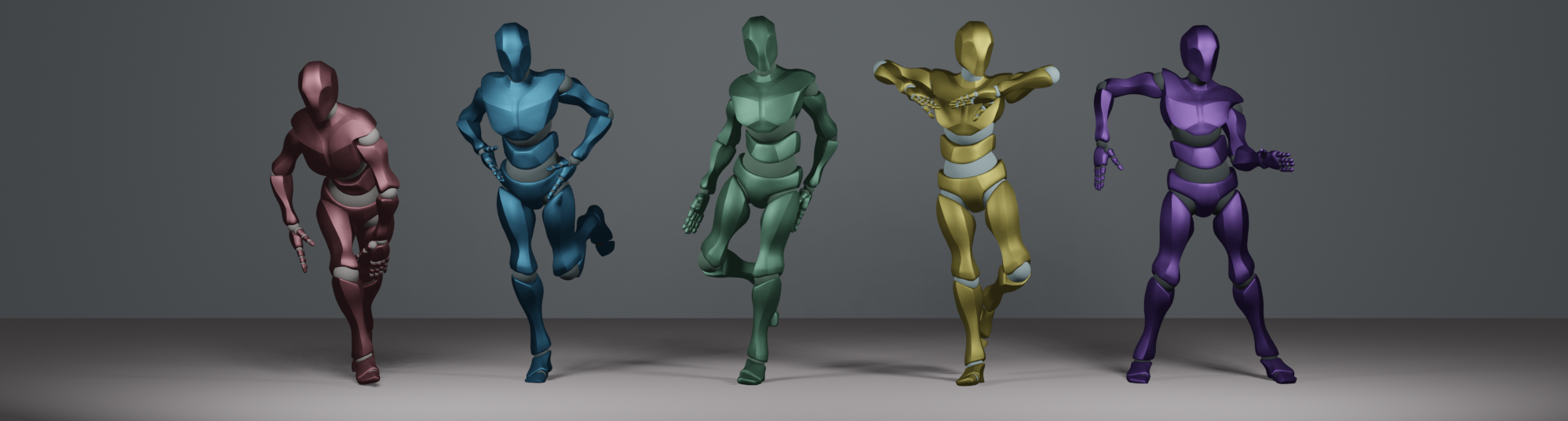

EDGE - Editable Dance Generation from Music

People love to dance for many different reasons. Whether it's the joy of moving to the music, the release of endorphins, or the shared experience of being in a group, dancing is a universally loved activity. It's a part of our culture and features in many animated movies. Thanks to artificial intelligence (AI), we can now generate dance sequences automatically for use in animation applications.

EDGE (Editable Dance GEneration) is a method for editable dance generation that creates realistic, physically plausible dances while remaining faithful to arbitrary input music. EDGE pairs a transformer-based diffusion model with Jukebox, a strong music feature extractor, and offers editing capabilities well suited to dance, including joint-wise conditioning, motion in-betweening, and dance continuation.
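To make the editing capabilities above more concrete, here is a minimal sketch of how such constraints are often expressed for diffusion models: as a mask over frames and joints that says which values are fixed and which are free to be generated. The text does not describe how EDGE implements editing, so the mask-based approach and all function names (`in_between_mask`, `joint_mask`, `apply_constraint`) are assumptions for illustration only.

```python
# Illustrative sketch only: mask-based editing constraints (assumed approach,
# hypothetical names). A motion is a list of frames; each frame is a list of
# per-joint values. mask[t][j] == True means "keep the reference value here".

def in_between_mask(num_frames, num_joints, keep_start, keep_end):
    """Fix the first keep_start and last keep_end frames; generate the rest.

    keep_end=0 gives a dance-continuation style mask (only the start is fixed).
    """
    return [
        [(t < keep_start or t >= num_frames - keep_end) for _ in range(num_joints)]
        for t in range(num_frames)
    ]

def joint_mask(num_frames, num_joints, fixed_joints):
    """Fix the chosen joints in every frame (joint-wise conditioning)."""
    fixed = set(fixed_joints)
    return [[j in fixed for j in range(num_joints)] for _ in range(num_frames)]

def apply_constraint(generated, reference, mask):
    """Take the reference value where the mask is True, else the generated one."""
    return [
        [ref if m else gen for gen, ref, m in zip(g_row, r_row, m_row)]
        for g_row, r_row, m_row in zip(generated, reference, mask)
    ]

# Tiny example: 4 frames, 2 joints, first and last frame fixed.
generated = [[0, 0] for _ in range(4)]
reference = [[1, 1] for _ in range(4)]
edited = apply_constraint(generated, reference, in_between_mask(4, 2, 1, 1))
```

In a diffusion sampler, a blend like `apply_constraint` would typically be applied during denoising so the generated part stays consistent with the fixed part. That detail is also an assumption here, not something stated in the text.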

EDGE uses the powerful Jukebox model to gain a broad understanding of music and produce high-quality dances.

Although EDGE is trained on five-second clips, it can generate dances of any length by imposing temporal constraints on a batch of sequences, so that consecutive clips line up where they meet.
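The idea of chaining short clips into a long dance can be sketched as follows. This is a simplified illustration, not EDGE's actual code: it assumes the temporal constraint means that the start of each clip must equal the end of the previous clip, and the frame counts (150 frames per clip, 75 frames of overlap, i.e. 30 frames per second with a half-clip overlap) are assumptions. The function names are hypothetical.

```python
# Illustrative sketch only (hypothetical names, assumed overlap scheme).
CLIP_FRAMES = 150     # 5 seconds at an assumed 30 frames per second
OVERLAP_FRAMES = 75   # assumed overlap of half a clip

def apply_temporal_constraint(batch, overlap):
    """Force the first `overlap` frames of each clip to equal the last
    `overlap` frames of the clip before it. Returns a new batch."""
    constrained = [list(clip) for clip in batch]
    for k in range(1, len(constrained)):
        prev = constrained[k - 1]
        if not 0 <= overlap <= min(len(prev), len(constrained[k])):
            raise ValueError("overlap must fit inside both clips")
        constrained[k][:overlap] = prev[len(prev) - overlap:]
    return constrained

def stitch(clips, overlap):
    """Join clips into one sequence, keeping each shared overlap only once."""
    if not clips:
        return []
    out = list(clips[0])
    for clip in clips[1:]:
        out.extend(clip[overlap:])
    return out

# Toy example with 4-frame clips and a 2-frame overlap; integers stand in
# for full-body poses, and 9 marks frames that the constraint overwrites.
batch = [[0, 1, 2, 3], [9, 9, 4, 5], [9, 9, 6, 7]]
constrained = apply_temporal_constraint(batch, overlap=2)
long_dance = stitch(constrained, overlap=2)
```

With this scheme, a batch of `n` clips yields `CLIP_FRAMES + (n - 1) * (CLIP_FRAMES - OVERLAP_FRAMES)` frames. In a diffusion sampler, the constraint would presumably be re-applied at each denoising step so that every clip is generated in agreement with its neighbours rather than being patched once at the end.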

Do you want to work with us on any of these problems?

We work on similar exciting problems, sometimes for fun and sometimes for commercial projects. If you have ideas or want to explore these fields with us, please get in touch or reply to this email.

No spam, no sharing with third parties. Only you and me.